Brain Bugs (19 pages)

Authors: Dean Buonomano

There are two main causes of fear-related brain bugs. First, the genetic subroutines that determine what we are hardwired to fear were written for a different time and place, and much of the code was written for a different species altogether. Our archaic neural operating system never received the message that predators and strangers are no longer as dangerous as they once were, and that there are more important things to fear. We can afford to fear predators, poisonous creatures, and people different from us less, and to focus more on eliminating poverty, curing diseases, developing rational defense policies, and protecting the environment.

The second cause of our fear-related brain bugs is that we are all too well prepared to learn to fear through observation. Observational learning evolved before the emergence of language, writing, TV, and Hollywood—before we were able to learn about things that happened in another time and place, or see things that never even happened in the real world. Because vicarious learning is in part unconscious, it seems to be partially resistant to reason and ill-prepared to distinguish fact from fiction. Furthermore, modern technology brings with it the ability to show people the same frightening event over and over again, presumably creating an amplified and overrepresented account of that event within our neural circuits.

One of the consequences of our genetic baggage is that, like the monkeys that are innately prepared to jump to conclusions about the danger posed by snakes, we are ready and willing to jump to conclusions, with minimal evidence, about the threat posed by those not from our tribe or nation. Tragically, this propensity is self-fulfilling: mutual fear inflames mutual aggression, which in turn warrants mutual fear. However, as we develop a more intimate understanding of the neural mechanisms of fear and its bugs, we will learn to better discriminate between the prehistoric whispers of our genes and the threats that are genuinely more likely to endanger our well-being.

Unreasonable Reasoning

Intuition can sometimes get things wrong. And intuition is what people use in life to make decisions.

—Mark Haddon, The Curious Incident of the Dog in the Night-Time

In the 1840s, in some hospitals, 20 percent of women died after childbirth. These deaths were almost invariably the result of puerperal fever (also called childbed fever): a disease characterized by fever, pus-filled skin eruptions, and generalized infection of the respiratory and urinary tracts. The cause was largely a mystery, but a few physicians in Europe and the United States hit upon the answer. One of them was the Hungarian doctor Ignaz Semmelweis. In 1846 Semmelweis noted that in the First Obstetric Clinic at the Viennese hospital, where doctors and students delivered babies, 13 percent of mothers died in the days following delivery (his carefully kept records show that in some months the rate was as high as 30 percent). However, in the Second Obstetric Clinic of the same hospital, where midwives delivered babies, the death rate was closer to 2 percent.

As the author Hal Hellman recounts: “Semmelweis began to suspect the hands of the students and the faculty. These he realized, might go from the innards of a pustulant corpse almost directly into a woman’s uterus.”¹

Semmelweis tested his hypothesis by instituting a strict policy regarding cleanliness and saw the puerperal fever rates plummet. Today his findings are considered to be among the most important in medicine, but two years after his initial study his strategy had still not been implemented in his own hospital. Semmelweis was not able to renew his appointment, and he was forced to leave to start a private practice. Although a few physicians rapidly accepted Semmelweis’s ideas, he and others were largely ignored for several more decades, and, by some estimates, 20 percent of the mothers in Parisian hospitals died after delivery in the 1860s. It was only in 1879 that the cause of puerperal fever was largely settled by Louis Pasteur.

Why were Semmelweis’s ideas ignored for decades?² The answer to this question is still debated. One factor was clearly that the notion of tiny evil life-forms, totally invisible to the eye, wreaking such havoc on the human body was so alien to people that it was considered preposterous. It has also been suggested that Semmelweis’s theory carried emotional baggage that biased physicians’ judgments: it required a doctor to accept that he himself had been an agent of death, infecting young mothers with a deadly disease. At least one physician at the time is reported to have committed suicide after coming to terms with what we know today as germ theory. There are undoubtedly many reasons germ theory was not readily embraced, but they are mostly the result of the amalgam of unconscious and irrational forces that influence our rational decisions.

COGNITIVE BIASES

The history of science, medicine, politics, and business is littered with examples of obstinate adherence to old customs, irrational beliefs, ill-conceived policies, and appalling decisions. Similar penchants are also observable in the daily decisions of our personal and professional lives. The causes of our poor decisions are complex and multifactorial, but they are in part attributable to the fact that human cognition is plagued with blind spots, preconceived assumptions, emotional influences, and built-in biases.

We are often left with the indelible impression that our decisions are the product of conscious deliberation. It is equally true, however, that like a press agent forced to come up with a semirational explanation for the appalling behavior of his client, our conscious mind is often justifying decisions that have already been made by hidden forces. It is impossible to fully grasp the sway of these forces on our decisions; however, the persuasiveness of the unconscious is well illustrated by the sheer disconnect between conscious perception and reality that arises from sensory illusions.

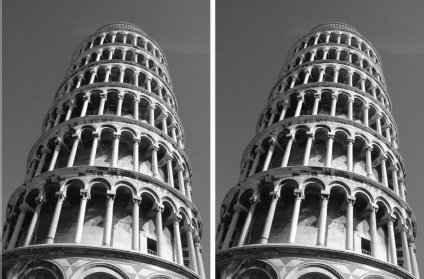

Both images of the Leaning Tower of Pisa shown in Figure 6.1 are exactly the same, yet the one on the right appears to be leaning more. The illusion is nonnegotiable; although I have seen it dozens of times, I still find it hard to believe that these are the same image. (The first time I saw it, I had to cut out the panel on the right and paste it on the left.) The illusion is a product of the assumptions the visual system makes about perspective. When parallel lines, such as those of railroad tracks, are projected onto your retina, they converge as they recede into the distance (because the angle between the two rails progressively decreases). It is because your brain has learned to use this convergence to make inferences about distance that one can create perspective by simply drawing two converging lines on a piece of paper. The picture in the illusion was taken from the perspective of the bottom of the building, and since the lines of the tower do not converge in the distance (height in this case), the brain interprets this as meaning that the towers are not parallel, and creates the illusion of divergence.³

Another well-known visual illusion occurs when you stare unwaveringly at a waterfall for 30 seconds or so, and then shift your gaze to look at unmoving rocks: the rocks appear to be rising. This is because motion is detected by different populations of neurons in the brain: “down” neurons fire in response to downward motion, and “up” neurons to upward movement. The perception of whether something is moving up or down is a result of the difference in the activity between these opposing populations of neurons—a tug-of-war between the up and down neurons. Even in the absence of movement these two populations have some level of spontaneous activity, but the competition between them is balanced. During the 30 seconds of constant stimulation created by the waterfall, the downward-moving neurons essentially get “tired” (they adapt). So when you view the stationary rocks, the normal balance of power has shifted, and the neurons that detect upward motion have a temporary advantage, creating illusory upward motion.
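The tug-of-war described above can be sketched as a toy calculation. This is only an illustration under stated assumptions: the firing rates, the `FATIGUE` factor, and the function name `perceived_motion` are hypothetical values and names chosen for the example, not measurements or a model taken from the text.

```python
def perceived_motion(up_rate, down_rate):
    """Toy readout: positive means upward, negative downward, zero no motion."""
    return up_rate - down_rate

# All numbers below are hypothetical, in arbitrary units.
BASELINE = 10.0  # spontaneous activity of each population
DRIVE = 40.0     # extra activity evoked in "down" neurons by the waterfall
FATIGUE = 0.5    # fraction of baseline activity left in adapted "down" neurons

# Stationary rocks before adaptation: the two populations are balanced.
before = perceived_motion(BASELINE, BASELINE)

# Watching the waterfall: "down" neurons dominate.
during = perceived_motion(BASELINE, BASELINE + DRIVE)

# Stationary rocks after adaptation: "down" neurons fall below baseline,
# so the "up" neurons win the tug-of-war.
after = perceived_motion(BASELINE, BASELINE * FATIGUE)

print(before, during, after)  # 0.0 -40.0 5.0
```

The positive value in the last case corresponds to the illusory upward drift of the rocks.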

Figure 6.1 The leaning tower illusion: The same exact picture of the Leaning Tower of Pisa is shown in both panels, yet the one on the right appears to be leaning more. (From [Kingdom et al., 2007].)

Visual perception is a product of both experience and the computational units used to build the brain. The leaning tower illusion is a product of experience, of unconsciously learned inferences about angles, lines, distance, and two-dimensional images. The waterfall illusion is a product of built-in properties of neurons and neural circuits. And for the most part conscious deliberation does not enter into the equation: no matter how much I consciously insist the two towers are parallel, one tower continues to lean more than the other. Conscious deliberation, together with the unconscious traces of our previous experiences and the nature of the brain’s hardware, contribute to the decisions we make. Most of the time these multiple components collaborate to conjure decisions that are well suited to our needs; however, like visual perception, “illusions” or biases sometimes arise.

Consider the subjective decision of whether you like something, such as a painting, a logo, or a piece of jewelry. What determines whether you find one painting more pleasing than another? For anybody who has “grown” to like a song, it comes as no surprise that we tend to prefer things that we are familiar with. Dozens of studies have confirmed that mere exposure to something, whether it’s a face, image, word, or sound, makes it more likely that people will later find it to be appealing.⁴ This familiarity bias for preferring things we are acquainted with is exploited in marketing; by repetitive exposure through ads we become familiar with a company’s product. The familiarity bias also seems to hold true for ideas. Another rule of thumb in decision making is “when in doubt, do nothing,” sometimes referred to as the status quo bias. One can imagine that the familiarity and status quo biases contributed to the rejection of Semmelweis’s ideas. Physicians resisted the germ theory in part because it was unfamiliar and ran against the status quo.

Cognitive psychologists and behavioral economists have described a vast catalogue of cognitive biases over the past decades, such as framing, loss aversion, anchoring, overconfidence, availability bias, and many others.⁵ To understand the consequences and causes of these cognitive biases, we will explore a few of the most robust and well studied.

Framing and Anchoring

The cognitive psychologists Daniel Kahneman and Amos Tversky were among the most vocal whistleblowers when it came to exposing the flaws and foibles of human decision-making. Their research established the foundations of what is now known as behavioral economics. In recognition of their work Daniel Kahneman received a Nobel Memorial Prize in Economics in 2002 (Amos Tversky passed away in 1996). One of the first cognitive biases they described demonstrated that the way in which a question is posed—the manner in which it is “framed”—can influence the answer.

In one of their classic framing studies, Kahneman and Tversky presented subjects with a scenario in which an outbreak of a rare disease was expected to kill 600 people.⁶ Two alternative programs to combat the outbreak were proposed, and the subjects were asked to choose between them:

(A) If program A is adopted, 200 people will be saved.

(B) If program B is adopted, there is a 1/3 probability that 600 people will be saved, and a 2/3 probability that no people will be saved.

In other words, the choice was between a sure outcome in which 200 people will be saved and a possible outcome in which everyone or nobody will be saved. (Note that if option B were chosen time and time again it too would, on average, save 200 people.) There is no right or wrong answer here, just different approaches to a dire scenario.
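The "on average" claim is an expected-value calculation: (1/3 × 600) + (2/3 × 0) = 200. A minimal sketch of that arithmetic follows; the variable names are chosen for illustration, and exact fractions are used so that 1/3 is not rounded.

```python
from fractions import Fraction

# Program A: a certain outcome.
saved_a = 200

# Program B: (probability, people saved) pairs, as stated in the scenario.
program_b = [(Fraction(1, 3), 600), (Fraction(2, 3), 0)]

# Expected number of people saved if program B were chosen repeatedly.
expected_saved_b = sum(p * saved for p, saved in program_b)

print(saved_a, expected_saved_b)  # 200 200
```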

They found that 72 percent of the subjects in the study chose to definitely save 200 lives (option A), and 28 percent chose to gamble in the hope of saving everyone (option B). In the second part of the study they presented a different group of subjects with the same choices, but worded them differently.

(A) If program A is adopted, 400 people will die.

(B) If program B is adopted, there is a 1/3 probability that nobody will die, and a 2/3 probability that 600 people will die.

Options A and B are exactly the same in both parts of the study; the only difference is in the wording. Yet, Tversky and Kahneman found that when responding to the second set of choices, people’s decisions were completely reversed: now the great majority of subjects, 78 percent, chose option B, in contrast to the 28 percent who chose option B in response to the first set of choices. In other words, framing the options in terms of 400 deaths out of 600 rather than 200 survivors out of 600 induced people to favor the option that amounted to a gamble; definitely saving 33 percent of the people was an acceptable outcome, but definitely losing 67 percent of the people was not.
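That the two wordings describe identical outcomes can be checked mechanically: subtracting each death count from the 600 people at risk should reproduce the "saved" version of the same program. The sketch below does only that bookkeeping; the dictionary layout and the helper name `deaths_to_saved` are illustrative choices, not part of the study.

```python
from fractions import Fraction

TOTAL = 600  # people at risk in the scenario

# Each program is a list of (probability, count) outcomes.
saved_frame = {
    "A": [(Fraction(1), 200)],
    "B": [(Fraction(1, 3), 600), (Fraction(2, 3), 0)],
}
death_frame = {
    "A": [(Fraction(1), 400)],
    "B": [(Fraction(1, 3), 0), (Fraction(2, 3), 600)],
}

def deaths_to_saved(outcomes):
    """Re-express (probability, deaths) outcomes as (probability, saved)."""
    return [(p, TOTAL - deaths) for p, deaths in outcomes]

for name in ("A", "B"):
    same = sorted(deaths_to_saved(death_frame[name])) == sorted(saved_frame[name])
    print(name, same)  # prints "A True" then "B True"
```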

Framing effects have been replicated many times, including in studies that have taken place while subjects are in a brain scanner. One such study consisted of multiple rounds of gambling decisions, each of which started with the subject receiving a specific amount of money.⁷ In one round, for example, subjects were given $50 and asked to decide between the following two choices:

(A) Keep $30.

(B) Take a gamble, with a 50/50 chance of keeping or losing the full $50.

The subjects did not actually keep or lose the proposed amounts of money, but they had a strong incentive to perform their best because they were paid for their participation in proportion to their winnings. Given these two options, subjects chose to gamble (option B) 43 percent of the time. Option A was then reworded, and the options were posed to the same individuals as:

(A) Lose $20.

(B) Take a gamble with a 50/50 chance of keeping or losing the full $50.

With option A now framed as a loss, people chose to gamble 62 percent of the time. Although keeping $30 or losing $20 out of $50 is the same thing, framing the question in terms of a loss made the risk of losing the full $50 seem more acceptable. Out of the 20 subjects in the study, every one of them decided to gamble more when the propositions were posed as a loss. Clearly, any participant who claimed his decision was based primarily on a rational analysis would be gravely mistaken.
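The arithmetic behind this round can be laid out explicitly. The sketch below takes the numbers exactly as given in the text (a $50 stake, a sure $30, and a 50/50 gamble on the full amount); it is an illustration of the stated example, not a description of how the study itself was analyzed, and the variable names are hypothetical.

```python
START = 50  # dollars given to the subject at the start of the round

# The sure option, expressed in each frame as the amount the subject ends up with.
keep_frame = 30            # "Keep $30"
lose_frame = START - 20    # "Lose $20"

# The gamble: (probability, amount the subject ends up with).
gamble = [(0.5, START), (0.5, 0)]
expected_gamble = sum(p * amount for p, amount in gamble)

print(keep_frame == lose_frame, expected_gamble)  # True 25.0
```

With these numbers the sure option is the same $30 under either wording, so only the framing differs between the two versions of the question.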

Although all subjects gambled more in the “lose” compared to the “keep” frame, there was considerable individual variability: some only gambled a little more often when option A was posed as a loss, while others gambled a lot more. We can say that the individuals who gambled only a bit more in the “lose” frame behaved more rationally since their decisions were only weakly influenced by the wording of the question. Interestingly, there was a correlation between the degree of “rationality” and activity in an area of the prefrontal cortex (the orbitofrontal cortex) across subjects. This is consistent with the general notion that the prefrontal areas of the brain play an important role in rational decision-making.