Modern Mind: An Intellectual History of the 20th Century

Authors: Peter Watson

Among professional economists, J. K. Galbraith is sometimes disparaged as a fellow professional much less influential with his colleagues than among the general public. That is to do him a disservice. In his many books he has used his ‘insider’ status as a trained economist to make some uncomfortable observations on the changing nature of society and the role economics plays in that change. Although Galbraith was born in 1908, this characteristic showed no sign of flagging in the last decade of the century, and in 1992 he published *The Culture of Contentment* and, four years later, *The Good Society: The Human Agenda*, his eighteenth and twentieth books. (There was another, *Name Dropping*, a memoir, in 1999.)

*The Culture of Contentment* is a deliberate misnomer. Galbraith is using irony here, irony little short of sarcasm.[26] What he really means is the culture of smugness. His argument is that until the mid-1970s, round about the oil crisis, the Western democracies accepted the idea of a mixed economy, and with that went economic and social progress. Since then, however, a prominent class has emerged, materially comfortable and even very rich, which, far from trying to help the less fortunate, has developed a whole infrastructure – politically and intellectually – to marginalise and even demonise them. Aspects of this include tax reductions to the better off and welfare cuts to the worse off, small, ‘manageable wars’ to maintain the unifying force of a common enemy, the idea of ‘unmitigated laissez-faire as the embodiment of freedom,’ and a desire for a cutback in government. The more important collective end result of all this, Galbraith says, is a blindness and a deafness among the ‘contented’ to the growing problems of society. While they are content to spend, or have spent in their name, trillions of dollars to defeat relatively minor enemy figures (Gaddafi, Noriega, Milosevic), they are extremely unwilling to spend money on the underclass nearer home. In a startling paragraph, he quotes figures to show that ‘the number of Americans living below the poverty line increased by 28 per cent in just ten years, from 24.5 million in 1978 to 32 million in 1988. By then, nearly one in five children was born in poverty in the United States, more than twice as high a proportion as in Canada or Germany.’[27]

Galbraith reserves special ire for Charles Murray. Murray, a Bradley Fellow at the American Enterprise Institute, a right-wing think tank in Washington, D.C., produced a controversial but well-documented book in 1984 called *Losing Ground*.[28] This examined American social policy from 1950 to 1980. It took the position that, in fact, in the 1950s the situation of blacks in America was fast improving. It argued that many of the statistics that showed they were discriminated against actually showed no such thing, only that they were poor. It held that a minority of blacks pulled ahead of the rest as the 1960s and 1970s passed, while the bulk remained behind. And it concluded that by and large the social initiatives of the Great Society not only failed but made things worse, because they were, in essence, fake: fake incentives, fake curricula in schools, fake diplomas in colleges, which changed nothing. Murray allied himself with what he called ‘the popular wisdom,’ rather than the wisdom of intellectuals or social scientists. This popular wisdom had three core premises: people respond to incentives and disincentives – sticks and carrots work; people are not inherently hardworking or moral – in the absence of countervailing influences, people will avoid work and be amoral; people must be held responsible for their actions – whether they *are* responsible in some ultimate philosophical or biochemical sense cannot be the issue if society is to function.[29]

His charts, showing for instance that black entry into the labour force was increasing steadily in the 1955–80 period, or that black wages were rising, or that entry of black children into schools increased, went against the grain of the prevailing (expert) wisdom of the time, as did his analysis of illegitimate births, which showed that there was partly an element of ‘poor’ behaviour in the figures and partly an element of ‘race.’[30]

But overall his message was that the situation in America in the 1950s, though not perfect, was fast improving and ought to have been left alone, to get even better, whereas the Great Society intervention had actually made things worse.

For Galbraith, Murray’s aim was clear: he wanted to get the poor off the federal budget and tax system and ‘off the consciences of the comfortable.’[31]

Galbraith returned to this theme in *The Good Society* (1996). He could never be an ‘angry’ writer; he is almost Chekhovian in his restraint. But in *The Good Society*, no less than in *The Culture of Contentment*, his contempt for his opponents is there, albeit in polite disguise. The significance of *The Good Society*, and what links many of the ideas considered in this chapter, is that it is a book by an economist in which economics is presented as the *servant* of the people, not the engine.[32]

Galbraith’s agenda for the good society is unashamedly left of centre; he regards the right-wing orthodoxies, or would-be orthodoxies, of 1975–90, say, as a mere dead end, a blind alley. It is now time to get back to the real agenda, which is to re-create the high-growth, low-unemployment, low-inflation societies of the post-World War II era, not for the sake of it, but because they were more civilised times, producing social and moral progress before a mini-Dark Age of selfishness, greed, and sanctimony.[33]

It is far from certain that Galbraith was listened to as much as he would have liked, or as much as he would have been earlier. Poverty, particularly poverty in the United States, remained a ‘hidden’ issue in the last years of the century, seemingly incapable of moving or shaking the contented classes.

The issue of race was more complicated. It was hardly an ‘invisible’ matter, and at a certain level – in the media, among professional politicians, in literature – the advances made by blacks and other minorities were there for all to see. And yet in a mass society, the mass media are very inconsistent in the picture they paint. In mass society the more profound truths are often revealed in less compelling and less entertaining forms, in particular through statistics. It is in this context that Andrew Hacker’s *Two Nations: Black and White, Separate, Hostile, Unequal*, published in 1992, was so shattering.[34] It returns us not only to the beginning of this chapter, and the discussion of rights, but to the civil rights movement, to Gunnar Myrdal, Charles Johnson, and W. E. B. Du Bois. Hacker’s message was that some things in America have not changed.

A professor of political science at Queens College in New York City, Andrew Hacker probably understands the U.S. Census figures better than anyone outside the government, and he lets the figures lead his argument. He has been analysing America’s social and racial statistics for a number of years, and is no firebrand but a reserved, even astringent academic, not given to hyperbole or rhetorical flourishes. He publishes his startling (and stark) conclusions mainly in the *New York Review of Books*, but *Two Nations* was more chilling than anything in the *Review*. His argument was so shocking that Hacker and his editors apparently felt the need to preface his central chapters with several ‘softer’ introductory chapters that put his figures into context, exploring and seeking to explain racism and the fact of being black in an anecdotal way, to prepare the reader for what was to come. The argument was in two parts. The figures showed not only that America was still deeply divided, after decades – a century – of effort, but that in many ways the situation had *deteriorated* since Myrdal’s day, despite what had been achieved by the civil rights movement. Dip into Hacker’s book at almost any page, and his results are disturbing.

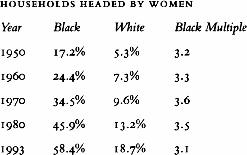

In other words, the situation in 1993 was, relatively speaking, no better than in 1950.[35]

‘The real problem in our time,’ wrote Hacker, ‘is that more and more black infants are being born to mothers who are immature and poor. Compared with white women – most of whom are older and more comfortably off – black women are twice as likely to have anemic conditions during pregnancy, twice as likely to have no prenatal care, and twice as likely to give birth to low-weight babies. Twice as many of their children develop serious health problems, including asthma, deafness, retardation, and learning disabilities, as well as conditions stemming from their own use of drugs and alcohol during pregnancy.’[36]

‘Measured in economic terms, the last two decades have not been auspicious ones for Americans of any race. Between 1970 and 1992, the median income for white families, computed in constant dollars, rose from $34,773 to $38,909, an increase of 11.9 percent. During this time black family income in fact went down a few dollars, from $21,330 to $21,161. In relative terms, black incomes fell from $613 to $544 for each $1,000 received by whites.’[37]

Although Hacker included a large chapter on crime, his figures on school desegregation were more startling still. In the early 1990s, 63.2 percent of all black children, nearly two out of three, were still in segregated schools. In some states the percentage of blacks in segregated schools was as high as 84 percent. Hacker’s conclusion was sombre: ‘In allocating responsibility, the response should be clear. It is white America that has made being black so disconsolate an estate. Legal slavery may be in the past, but segregation and subordination have been allowed to persist. Even today, America imposes a stigma on every black child at birth…. A huge racial chasm remains, and there are few signs that the coming century will see it closed. A century and a quarter after slavery, white America continues to ask of its black citizens an extra patience and perseverance that whites have never required of themselves. So the question for white Americans is essentially moral: is it right to impose on members of an entire race a lesser start in life and then to expect from them a degree of resolution that has never been demanded from your own race?’[38]

The oil crisis of 1973–74 surely proved Friedrich von Hayek and Milton Friedman right in at least one respect. Economic freedom, if not the most basic of freedoms, as Ronald Dworkin argues, is still pretty fundamental. Since the oil crisis, and the economic transformation it ignited, many areas of life in the West – politics, psychology, moral philosophy, and sociology – have been refashioned. The works of Galbraith, Sen, and Hacker, or more accurately the *failure* of these works to stimulate, say, the kind of popular (as opposed to academic) debate that Michael Harrington’s *Other America* provoked in the early 1960s, is perhaps the defining element of the current public mood. Individualism and individuality are now so prized that they have tipped over into selfishness. The middle classes are too busy doing well to do good.[39]

THE WAGES OF REPRESSION

When Dr. Michael Gottlieb, from the University of California at Los Angeles, arrived in Washington in the second week of September 1981 for a conference at the National Institutes of Health (NIH), he was optimistic that the American medical authorities were finally taking seriously a new illness that, he feared, could soon reach epidemic proportions. The NIH is the world’s biggest and most powerful medical organisation. Set on a campus of more than 300 acres in the Bethesda hills, ten miles northwest of Washington, D.C., it had by the end of the century an annual budget of $13 billion and housed, among other things, the National Institute of Allergy and Infectious Diseases, the National Heart, Lung and Blood Institute, and the National Cancer Institute (NCI).

The conference Gottlieb was attending had been called at the NCI to explore an outbreak in the United States of a rare skin cancer known as Kaposi’s sarcoma (KS).[1]

One of the other doctors at the conference was Linda Laubenstein, a blood specialist at New York University. She had first seen KS in a patient of hers in September 1979, when it had presented as a generalised rash on a man’s skin, associated with enlarged lymph nodes. At that point she had never heard of KS, and had looked it up after the cancer had been diagnosed by a dermatologist. This particular type of illness had originally been discovered in 1871 among Mediterranean and Jewish men, and in the century that followed, between 500 and 800 cases had been reported. It was also seen among the Bantu in Africa. It usually struck men in their forties and fifties and was generally benign; the lesions were painless, and the victims usually died much later of something else. But, as Laubenstein and Gottlieb now knew, KS in America was much more vicious: 120 cases had already been reported, often in association with a rare, parasitical form of pneumonia – Pneumocystis – and in 90 percent of cases the patients were gay men.[2]

An added, and worrying, complication was that these patients also suffered from a strange deficiency in their immune systems – the antibodies in their blood simply refused to fight the different infections that came along, so that the men died from whatever illnesses they contracted while their bodies were already weakened by cancer.

Gottlieb was astonished by the Bethesda conference. He had arrived amid rumours that the NIH was at last going to fund a research program into this new disease. The Center for Disease Control, headquartered in Atlanta, Georgia, had been trying to trace where the outbreak had started and how it had spread, but the CDC was just the ‘shock troops’ in the fight against disease; it was now time for more fundamental research. So Gottlieb sat in quiet amazement as he and the others were lectured on KS and its treatment in Africa, as if there was no awareness at NIH that the disease had arrived in America, and in a far more virulent form than across the Atlantic. He left the meeting bewildered and depressed and returned to Los Angeles to work on a paper he was planning for the *New England Journal of Medicine* on the links between KS and *Pneumocystis carinii* that he was observing. But then he found that the *Journal* was not ‘overly enthusiastic’ about publishing his article, and kept sending it back for one amendment after another. All this prevarication raised in Gottlieb’s mind the feeling that, among the powers-that-be, in the medical world at least, the new outbreak of disease was getting less attention than he thought it deserved, and that this was so because the great preponderance of victims were homosexual.[3]

It would be another year before this set of symptoms acquired a name. First it was GRID, standing for Gay-Related Immune Deficiency, then ACIDS, for Acquired Community Immune Deficiency Syndrome, and finally, in mid-1982, AIDS, for Acquired Immune Deficiency Syndrome. The right name for the disease was the least of the problems. In March of the following year the *New York Native*, a Manhattan gay newspaper, ran the headline ‘1,112 AND COUNTING.’ That was the number of homosexual men who had died from the disease.[4]

But AIDS was significant for two reasons over and above the sheer numbers it cut down, tragic though that was: first, because it encompassed the two great strands of research that, apart from psychiatric drugs, had dominated medical thinking in the postwar period; and, second, because the people who were cut down by AIDS were, disproportionately, involved in artistic and intellectual life.

The two strands of inquiry dominating medical thought after 1945 were the biochemistry of the immunological system and the nature of cancer. After the first reports in the early 1950s about the links between smoking and cancer, it was soon observed that there was almost as intimate a link between smoking and heart disease. Coronary thrombosis – heart attack – was found to be much more common in smokers than in nonsmokers, especially among men, and this provoked two approaches in medical research. In heart disease the crucial factor was blood pressure, which deviated from the norm for two main reasons. Insofar as smoking damaged the lungs, and made them less efficient at absorbing oxygen from the air, each breath sent correspondingly less oxygen into the body’s system, causing the heart to work harder to achieve the same effect. Over time this imposed an added burden on the muscle of the heart, which eventually gave out. In such cases blood pressure was low, but high blood pressure was a problem also, this time because it was found that foods high in animal fats caused deposits of cholesterol to be laid down in the blood vessels, narrowing them and, in extreme cases, blocking them entirely. This also put pressure on the heart, and on the blood vessels themselves, because the same volume of blood was being forced through less space. In extreme cases this could damage the muscle of the heart and/or rupture the walls of the blood vessels, including those of the brain, in a cerebral haemorrhage, or stroke. Doctors responded in two ways: by devising drugs that either raised or lowered blood pressure, in part by ‘thinning’ the blood, and, where the heart had been irreparably damaged, by replacing it in its entirety.

Before World War II there were in effect no drugs that would lower blood pressure. By 1970 there were no fewer than four families of drugs in wide use, of which the best-known were the so-called beta-blockers. These drugs grew out of a line of research that dated back to the 1930s, in which it had been found that acetylcholine, the transmitter substance that played a part in nerve impulses (see chapter 28, page 501), also exerted an influence on nervous structures that governed the heart and the blood vessels.[5] In the nerve pathways that eventually lead to the coronary system, a substance similar to adrenaline was released, and it was this which controlled the action of the heart and blood vessels. So began a search for some way of interfering with – blocking – this action. In 1948 Raymond Ahlquist, at the University of Georgia, found that the receptors involved in this mechanism were of two types, which he arbitrarily named alpha and beta, because they responded to different substances. The beta receptors, as Ahlquist called them, stimulated both the rate and the force of the heartbeat, which gave James Black, a British doctor, the idea of seeing whether blocking the action of adrenaline at these receptors might reduce the heart’s activity.[6]

The first substance he identified, pronethalol, was effective but was soon shown to produce tumours in mice and was withdrawn. Its replacement, propranolol, had no such drawbacks and became the first of many ‘beta-blockers.’ They were in fact subsequently found to have a wide range of uses: besides lowering blood pressure, they prevented heart irregularities and helped patients survive after a heart attack.[7]

Heart transplants were a more radical form of intervention for heart disease, and the option grew more attractive as doctors watched developments in molecular biology and realised that, at some point, cloning might become a possibility. The central intellectual problem with transplants, apart from the difficult surgery involved and the ethical problems of obtaining donor organs from newly deceased individuals, was immunological: the organs were in effect foreign bodies introduced into a person’s physiological system, and were therefore rejected as intruders.

The research on immunosuppressants grew out of cancer research, in particular leukaemia, which is a tumour of the lymphocytes, the white blood cells that rapidly reproduce to fight off foreign bodies in disease.[8]

After the war, and even before the structure of DNA had been identified, its role in reproduction suggested that it might be relevant to cancer research (cancer itself being the rapid reproduction of malignant cells). Early studies showed that certain purines (such as adenine and guanine) and pyrimidines (cytosine and thymine) did affect the growth of cells. In 1951 a substance known as 6-mercaptopurine (6-MP) was found to cause remission for a time in certain leukaemias. The good news never lasted, but the action of 6-MP was potent enough for its role in immunosuppression to be tested. The crucial experiments were carried out in the late 1950s at the New England Medical Center, where Robert Schwartz and William Dameshek decided to try two drugs used for leukaemia – methotrexate and 6-MP – on the immune response of rabbits. As Miles Weatherall tells the story in his history of modern medicine, this major breakthrough turned on chance. Schwartz wrote to Lederle Laboratories for samples of methotrexate, and to Burroughs Wellcome for 6-MP.[9] He never heard from Lederle, but Burroughs Wellcome sent him generous amounts of 6-MP. He therefore went ahead with this and found within weeks that it was indeed a powerful suppressor of the immune response. It was subsequently found that methotrexate had no effect on rabbits, so, as Schwartz himself remarked, if the responses of the two companies had been reversed, this particular avenue of inquiry would have drawn a blank, and the great breakthrough would never have happened.[10]

Dr. Christiaan Barnard, in South Africa, performed the world’s first heart transplant between humans in December 1967, with the patient surviving for eighteen days; a year later, in Barnard’s second heart-transplant operation, the patient lived for seventy-four days. A nerve transplant followed in Germany in 1970, and by 1978 immunosuppressant drugs were being sold commercially for use in transplant surgery. In 1984, at Loma Linda University Medical Center in California, the heart of a baboon was inserted into a two-week-old girl. She survived for only twenty days, but new prospects of ‘organ farming’ had been opened up.[11]

By the time the AIDS epidemic appeared, therefore, a lot was already known about the human body’s immunological system, including a link between immune suppression and cancer. In 1978 Robert Gallo, a research doctor at the National Cancer Institute in Bethesda, discovered a new form of virus, known as a retrovirus, that caused leukaemia.[12] He had been looking at viruses because by then it was known that leukaemia in cats – feline leukaemia, a major cause of death in cats – was caused by a virus that knocked out the cats’ immune system. Japanese researchers had studied T cell leukaemia (T cells being the recently discovered white blood cells that are the key components of the immune system), but it was Gallo who identified the human T cell leukaemia virus, or HTLV, a major practical and theoretical breakthrough. Following this approach, in February 1983, Professor Luc Montagnier, at the Pasteur Institute in Paris, announced that he was sure he had discovered a new virus that was cytopathic, meaning it killed certain kinds of cell, including T lymphocytes. It operated like the feline leukaemia virus, which caused cancer but also knocked out the immune system – exactly the way AIDS behaved. Montagnier did not feel that ‘his’ virus was the leukaemia virus – it behaved in somewhat different ways and therefore had different genetic properties. This view strengthened when he heard from a colleague about a certain category of virus, known as lentiviruses, from the Latin *lentus*, ‘slow’.[13]

Lentiviruses lie dormant in cells before bursting into action. That is what seemed to happen with the AIDS virus, unlike the leukaemia virus. Montagnier called the virus LAV, for lymphadenopathy-associated virus, because he had taken it from the lymph nodes of his patients.[14]

*

Intellectually speaking, there are five strands to current cancer research.[15] Viruses are one; the others are the environment, genes, personality (reacting with the environment), and auto-immunology, the idea that the body contains the potential for cancerous growth, which the immune system holds in check until old age, when that system breaks down. There is no question that isolated breakthroughs have been made in cancer research, as with Gallo’s viral discoveries and the link between tobacco and cancer, but the bleak truths about the disease were set out in 1993 by Harold Varmus, winner of the 1989 Nobel Prize in physiology or medicine and head of the NIH, and Robert Weinberg of MIT in their book *Genes and the Biology of Cancer*.[16]

They concluded that tobacco accounts for 30 percent of all cancer deaths in the United States, diet a further 35 percent, and that no other factor accounts for more than 7 percent. Once the smoking-related tumours are subtracted from the overall figures, however, the incidence and death rates for the great majority of cancers have either remained level or declined.[17]

Varmus and Weinberg therefore attach relatively little importance to the environment in causing cancer and concentrate instead on its biology – viruses and genes. The latest research shows that there are oncogenes, mutated forms of normal cellular genes (proto-oncogenes), some of them first identified in viruses, that bring about abnormal growth, and tumour-suppressor genes that, when missing, fail to prevent abnormal growth. Though this may represent an intellectual triumph of sorts, even Varmus and Weinberg admit that it has not yet been translated into effective treatment. In fact, ‘incidence and mortality have changed very little in the past few decades.’[18]

This failure has become an intellectual issue in itself – the tendency for government and cancer institutes to say that cancer can be cured (which is true, up to a point), versus the independent voice of the medical journals, which underline from time to time that, with a few exceptions, incidence and survival rates have not changed, or that most of the improvements occurred years ago (also true).