The Future of the Mind

Authors: Michio Kaku

SILICON CONSCIOUSNESS

As we’ve seen, human consciousness is an imperfect patchwork of different abilities developed over millions of years of evolution. Given information about their physical and social world, robots may be able to create simulations similar (or in some respects, even superior) to ours, but silicon consciousness might differ from ours in two key areas: emotions and goals.

Historically, AI researchers ignored the problem of emotions, considering it a secondary issue. The goal was to create a robot that was logical and rational, not scatterbrained and impulsive. Hence, the science fiction of the 1950s and ’60s stressed robots (and humanoids like Spock on Star Trek) that had perfect, logical brains.

We saw with the uncanny valley that robots will have to look a certain way if they’re to enter our homes, but some people argue that robots must also have emotions so that we can bond with, take care of, and interact productively with them. In other words, robots will need Level II consciousness. To accomplish this, robots will first have to recognize the full spectrum of human emotions. By analyzing subtle facial movements of the eyebrows, eyelids, lips, cheeks, etc., a robot will be able to identify the emotional state of a human, such as its owner. One institution that has excelled in creating robots that recognize and mimic emotion is the MIT Media Laboratory.

I have had the pleasure of visiting the laboratory, outside Boston, on several occasions, and it is like visiting a toy factory for grown-ups. Everywhere you look, you see futuristic, high-tech devices designed to make our lives more interesting, enjoyable, and convenient.

As I looked around the room, I saw many of the high-tech graphics that eventually found their way into Hollywood movies like

Minority Report

and

AI

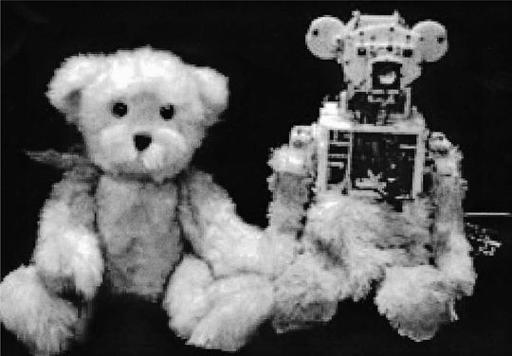

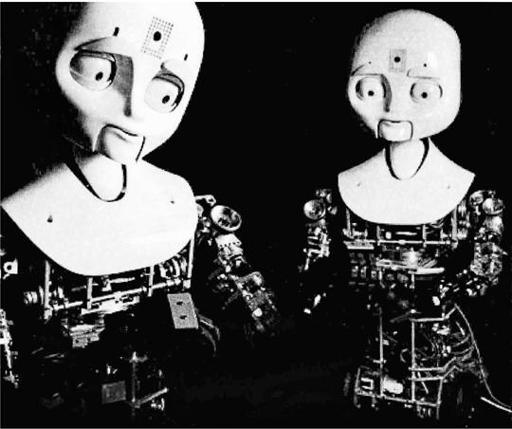

. As I wandered through this playground of the future, I came across two intriguing robots, Huggable and Nexi. Their creator, Dr. Cynthia Breazeal, explained to me that these robots have specific goals. Huggable is a cute teddy bear–like robot that can bond with children. It can identify the emotions of children; it has video cameras for eyes, a speaker for its mouth, and sensors in its skin (so it can tell when it is being tickled, poked, or hugged). Eventually, a robot like this might become a tutor, babysitter, nurse’s aide, or a playmate.

Nexi, on the other hand, can bond with adults. It looks a little like the Pillsbury Doughboy. It has a round, puffy, friendly face, with large eyes that can roll around. It has already been tested in a nursing home, and the elderly patients all loved it. Once the seniors got accustomed to Nexi, they would kiss it, talk to it, and miss it when it had to leave. (See Figure 12.)

Dr. Breazeal told me she designed Huggable and Nexi because she was not satisfied with earlier robots, which looked like tin cans full of wires, gears, and motors. In order to design a robot that could interact emotionally with people, she needed to figure out how she could get it to perform and bond like us. Plus, she wanted robots that weren’t stuck on a laboratory shelf but could venture out into the real world. The former director of MIT’s Media Lab, Dr. Frank Moss, says, “That is why Breazeal decided in 2004 that it was time to create a new generation of social robots that could live anywhere: homes, schools, hospitals, elder care facilities, and so on.”

At Waseda University in Japan, scientists are working on a robot that has upper-body motions representing emotions (fear, anger, surprise, joy, disgust, sadness) and can hear, smell, see, and touch. It has been programmed to carry out simple goals, such as satisfying its hunger for energy and avoiding dangerous situations.

Their goal is to integrate the senses with the emotions, so that the robot acts appropriately in different situations.

Figure 12. Huggable (top) and Nexi (bottom), two robots built at the MIT Media Laboratory that were explicitly designed to interact with humans via emotions. (illustration credit 10.1)

Not to be outdone, the European Commission is funding an ongoing project, called Feelix Growing, which seeks to promote artificial intelligence in the UK, France, Switzerland, Greece, and Denmark.

EMOTIONAL ROBOTS

Meet Nao.

When he’s happy, he will stretch out his arms to greet you, wanting a big hug. When he’s sad, he turns his head downward and appears forlorn, with his shoulders hunched forward. When he’s scared, he cowers in fear, until someone pats him reassuringly on the head.

He’s just like a one-year-old boy, except that he’s a robot. Nao is about one and a half feet tall, and looks very much like some of the robots you see in a toy store, like the Transformers, except he’s one of the most advanced emotional robots on earth. He was built by scientists at the UK’s University of Hertfordshire, whose research was funded by the European Union.

His creators have programmed him to show emotions like happiness, sadness, fear, excitement, and pride. While other robots have rudimentary facial and verbal gestures that communicate their emotions, Nao excels in body language, such as posture and gesture. Nao even dances.

Unlike other robots, which specialize in mastering just one area of the emotions, Nao has mastered a wide range of emotional responses. First, Nao locks onto visitors’ faces, identifies them, and remembers his previous interactions with each of them. Second, he begins to follow their movements. For example, he can follow their gaze and tell what they are looking at. Third, he begins to bond with them and learns to respond to their gestures. For example, if you smile at him, or pat him on his head, he knows that this is a positive sign. Because his brain has neural networks, he learns from interactions with humans. Fourth, Nao exhibits emotions in response to his interactions with people. (His emotional responses are all preprogrammed, like a tape recorder, but he decides which emotion to choose to fit the situation.)

And lastly, the more Nao interacts with a human, the better he gets at understanding the moods of that person and the stronger the bond becomes.

Not only does Nao have a personality, he can actually have several of them. Because he learns from his interactions with humans and each interaction is unique, eventually different personalities begin to emerge. For example, one personality might be quite independent, not requiring much human guidance. Another personality might be timid and fearful, scared of objects in a room, constantly requiring human intervention.

The project leader for Nao is Dr. Lola Cañamero, a computer scientist at the University of Hertfordshire. To start this ambitious project, she analyzed the interactions of chimpanzees. Her goal was to reproduce, as closely as she could, the emotional behavior of a one-year-old chimpanzee.

She sees immediate applications for these emotional robots. Like Dr. Breazeal, she wants to use these robots to relieve the anxiety of young children who are in hospitals. She says, “We want to explore different roles—the robots will help the children to understand their treatment, explain what they have to do. We want to help the children to control their anxiety.”

Another possibility is that the robots will become companions at nursing homes. Nao could become a valuable addition to the staff of a hospital. At some point, robots like these might become playmates to children and a part of the family.

“It’s hard to predict the future, but it won’t be too long before the computer in front of you will be a social robot. You’ll be able to talk to it, flirt with it, or even get angry and yell at it—and it will understand you and your emotions,” says Dr. Terrence Sejnowski of the Salk Institute, near San Diego. This is the easy part. The hard part is to gauge the response of the robot, given this information. If the owner is angry or displeased, the robot has to be able to factor this into its response.

EMOTIONS: DETERMINING WHAT IS IMPORTANT

What’s more, AI researchers have begun to realize that emotions may be a key to consciousness.

Neuroscientists like Dr. Antonio Damasio have found that when the link between the prefrontal lobe (which governs rational thought) and the emotional centers (e.g., the limbic system) is damaged, patients cannot make value judgments. They are paralyzed when making the simplest of decisions (what things to buy, when to set an appointment, which color pen to use) because everything has the same value to them. Hence, emotions are not a luxury; they are absolutely essential, and without them a robot will have difficulty determining what is important and what is not. So emotions, instead of being peripheral to the progress of artificial intelligence, are now assuming central importance.

If a robot encounters a raging fire, it might rescue the computer files first, not the people, since its programming might say that valuable documents cannot be replaced but workers always can be. It is crucial that robots be programmed to distinguish between what is important and what is not, and emotions are shortcuts the brain uses to rapidly determine this. Robots would thus have to be programmed to have a value system—that human life is more important than material objects, that children should be rescued first in an emergency, that objects with a higher price are more valuable than objects with a lower price, etc. Since robots do not come equipped with values, a huge list of value judgments must be uploaded into them.
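To make the idea of an uploaded value system concrete, here is a minimal sketch in Python of how the three example rules above might be ranked. It is only an illustration: the names `Item`, `rescue_priority`, and `rescue_order`, and the sample scene, are hypothetical and do not describe how any real robot is programmed.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """Something the robot could rescue (hypothetical model)."""
    name: str
    is_human: bool = False
    is_child: bool = False
    price: float = 0.0  # only meaningful for material objects


def rescue_priority(item: Item) -> tuple:
    # Smaller tuples sort first, so they are rescued first:
    # 1. humans before material objects
    # 2. children before adults
    # 3. higher-priced objects before lower-priced ones
    return (not item.is_human, not item.is_child, -item.price)


def rescue_order(items):
    """Return the items in the order the value system says to rescue them."""
    return sorted(items, key=rescue_priority)


scene = [
    Item("computer files", price=50_000.0),
    Item("office worker", is_human=True),
    Item("visiting child", is_human=True, is_child=True),
    Item("desk lamp", price=20.0),
]

print([item.name for item in rescue_order(scene)])
# ['visiting child', 'office worker', 'computer files', 'desk lamp']
```

Even this toy version shows why the list of value judgments would have to be huge: every situation the three rules do not cover would need a rule of its own.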

The problem with emotions, however, is that they are sometimes irrational, while robots are mathematically precise. So silicon consciousness may differ from human consciousness in key ways. For example, humans have little control over emotions, since they happen so rapidly and because they originate in the limbic system, not the prefrontal cortex of the brain. Furthermore, our emotions are often biased. Numerous tests have shown that we tend to overestimate the abilities of people who are handsome or pretty. Good-looking people tend to rise higher in society and have better jobs, although they may not be as talented as others. As the expression goes, “Beauty has its privileges.”

Similarly, silicon consciousness may not take into account subtle cues that humans use when they meet one another, such as body language. When people enter a room, young people usually defer to older ones and low-ranked staff members show extra courtesy to senior officials. We show our deference in the way we move our bodies, our choice of words, and our gestures. Because body language is older than language itself, it is hardwired into the brain in subtle ways. Robots, if they are to interact socially with people, will have to learn these unconscious cues.

Our consciousness is influenced by peculiarities in our evolutionary past, which robots will not have, so silicon consciousness may not have the same gaps or quirks as ours.

A MENU OF EMOTIONS

Since emotions have to be programmed into robots from the outside, manufacturers may offer a menu of emotions carefully chosen on the basis of whether they are necessary, useful, or will increase bonding with the owner.

In all likelihood, robots will be programmed to have only a few human emotions, depending on the situation. Perhaps the emotion most valued by the robot’s owner will be loyalty. One wants a robot that faithfully carries out its commands without complaints, that understands the needs of the master and anticipates them. The last thing an owner will want is a robot with an attitude, one that talks back, criticizes people, and whines. Helpful criticisms are important, but they must be made in a constructive, tactful way. Also, if humans give it conflicting commands, the robot should know to ignore all of them except those coming from its owner.

Empathy will be another emotion that will be valued by the owner. Robots that have empathy will understand the problems of others and will come to their aid. By interpreting facial movements and listening to tone of voice, robots will be able to identify when a person is in distress and will provide assistance when possible.