The Information (61 page)

751…

The world’s computers have spent many cycles analyzing the first trillion or so known decimal digits of this cosmic message, and as far as anyone can tell, they appear normal. No statistical features have been discovered—no biases or correlations, local or remote. It is a quintessentially nonrandom number that seems to behave randomly. Given the nth digit, there is no shortcut for guessing the nth plus one. Once again, the next bit is always a surprise.

How much information, then, is represented by this string of digits? Is it information rich, like a random number? Or information poor, like an ordered sequence?

The telegraph operator could, of course, save many keystrokes—infinitely many, in the long run—by simply sending the message “Π.” But this is a cheat. It presumes knowledge previously shared by the sender and the receiver. The sender has to recognize this special sequence to begin with, and then the receiver has to know what Π is, and how to look up its decimal expansion, or else how to compute it. In effect, they need to share a code book.

This does not mean, however, that Π contains a lot of information. The essential message can be sent in fewer keystrokes. The telegraph operator has several strategies available. For example, he could say, “Take 4, subtract 4/3, add 4/5, subtract 4/7, and so on.” The telegraph operator sends an algorithm, that is. This infinite series of fractions converges slowly upon Π, so the recipient has a lot of work to do, but the message itself is economical: the total information content is the same no matter how many decimal digits are required.
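The operator's instruction can be written out as a short program. The following is only a sketch in Python: the function name `leibniz_pi` and the choice of floating-point arithmetic are assumptions made for illustration, not anything specified in the text. It shows that the program stays the same size however many terms the recipient chooses to sum, while the work grows with the precision wanted.

```python
def leibniz_pi(terms: int) -> float:
    """Sum the first `terms` terms of the series 4 - 4/3 + 4/5 - 4/7 + ...

    `leibniz_pi` is a hypothetical name chosen for this illustration.
    """
    total = 0.0
    for k in range(terms):
        sign = 1.0 if k % 2 == 0 else -1.0   # signs alternate: +, -, +, -
        total += sign * 4.0 / (2 * k + 1)    # denominators are 1, 3, 5, 7, ...
    return total


if __name__ == "__main__":
    # The same short program serves for any precision; only the
    # recipient's workload (the number of terms) changes.
    for terms in (10, 1_000, 100_000):
        print(terms, leibniz_pi(terms))
```

The slow convergence is visible in practice: the error after n terms is roughly 1/n, so each additional correct decimal digit costs about ten times as many terms.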

The issue of shared knowledge at the far ends of the line brings complications. Sometimes people like to frame this sort of problem—the problem of information content in messages—in terms of communicating with an alien life-form in a faraway galaxy. What could we tell them? What would we want to say? The laws of mathematics being universal, we tend to think that Π would be one message any intelligent race would recognize. Only, they could hardly be expected to know the Greek letter. Nor would they be likely to recognize the decimal digits “3.1415926535 …” unless they happened to have ten fingers.

The sender of a message can never fully know his recipient’s mental code book. Two lights in a window might mean nothing or might mean “The British come by sea.” Every poem is a message, different for every reader. There is a way to make the fuzziness of this line of thinking go away. Chaitin expressed it this way:

It is preferable to consider communication not with a distant friend but with a digital computer. The friend might have the wit to make inferences about numbers or to construct a series from partial information or from vague instructions. The computer does not have that capacity, and for our purposes that deficiency is an advantage. Instructions given the computer must be complete and explicit, and they must enable it to proceed step by step.


In other words: the message is an algorithm. The recipient is a machine; it has no creativity, no uncertainty, and no knowledge, except whatever “knowledge” is inherent in the machine’s structure. By the 1960s, digital computers were already getting their instructions in a form measured in bits, so it was natural to think about how much information was contained in any algorithm.

A different sort of message would be this:

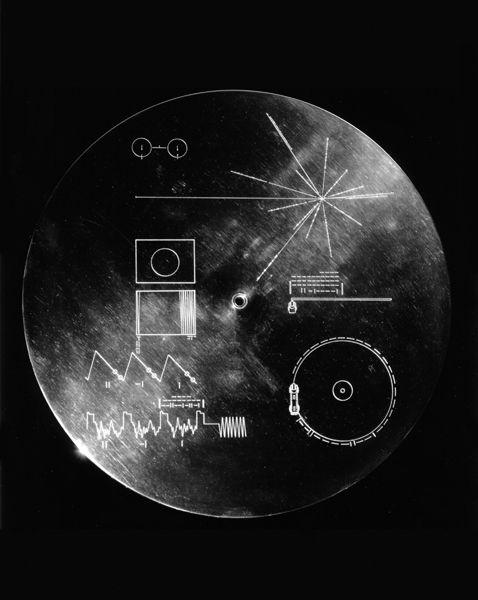

Even to the eye this sequence of notes seems nonrandom. It happens that the message they represent is already making its way through interstellar space, 10 billion miles from its origin, at a tiny fraction of light speed. The message is not encoded in this print-based notation, nor in any digital form, but as microscopic waves in a single long groove winding in a spiral engraved on a disc twelve inches in diameter and one-fiftieth of an inch in thickness. The disc might have been vinyl, but in this case it was copper, plated with gold. This analog means of capturing, preserving, and reproducing sound was invented in 1877 by Thomas Edison, who called it phonography. It remained the most popular audio technology a hundred years later—though not for much longer—and in 1977 a committee led by the astronomer Carl Sagan created a particular phonograph record and stowed copies in a pair of spacecraft named Voyager 1 and Voyager 2, each the size of a small automobile, launched that summer from Cape Canaveral, Florida.

So it is a message in an interstellar bottle. The message has no meaning, apart from its patterns, which is to say that it is abstract art: the first prelude of Johann Sebastian Bach’s Well-Tempered Clavier, as played on the piano by Glenn Gould. More generally, perhaps the meaning is “There is intelligent life here.” Besides the Bach prelude, the record includes music samples from several different cultures and a selection of earthly sounds: wind, surf, and thunder; spoken greetings in fifty-five languages; the voices of crickets, frogs, and whales; a ship’s horn, the clatter of a horse-drawn cart, and a tapping in Morse code. Along with the phonograph record are a cartridge and needle and a brief pictographic instruction manual. The committee did not bother with a phonograph player or a source of electrical power. Maybe the aliens will find a way to convert those analog metallic grooves into waves in whatever fluid serves as their atmosphere—or into some other suitable input for their alien senses.

Would they recognize the intricate patterned structure of the Bach prelude (say), as distinct from the less interesting, more random chatter of crickets? Would the sheet music convey a clearer message—the written notes containing, after all, the essence of Bach’s creation? And, more generally, what kind of knowledge would be needed at the far end of the line—what kind of code book—to decipher the message? An appreciation of counterpoint and voice leading? A sense of the tonal context and performance practices of the European Baroque? The sounds—the notes—come in groups; they form shapes, called melodies; they obey the rules of an implicit grammar. Does the music carry its own logic with it, independent of geography and history? On earth, meanwhile, within a few years, even before the Voyagers had sailed past the solar system’s edge, music was seldom recorded in analog form anymore. Better to store the sounds of the Well-Tempered Clavier as bits: the waveforms discretized without loss as per the Shannon sampling theorem, and the information preserved in dozens of plausible media.

In terms of bits, a Bach prelude might not seem to have much information at all. As penned by Bach on two manuscript pages, this one amounts to six hundred notes, characters in a small alphabet. As Glenn Gould played it on a piano in 1964—adding the performer’s layers of nuance and variation to the bare instructions—it lasts a minute and thirty-six seconds. The sound of that performance, recorded onto a CD, microscopic pits burned by a laser onto a slim disc of polycarbonate plastic, comprises 135 million bits. But this bitstream can be compressed considerably with no loss of information. Alternatively, the prelude fits on a small player-piano roll (descendant of Jacquard’s loom, predecessor of punched-card computing); encoded electronically with the MIDI protocol, it uses a few thousand bits. Even the basic six-hundred-character message has tremendous redundancy: unvarying tempo, uniform timbre, just a brief melodic pattern, a word, repeated over and over with slight variations till the final bars. It is famously, deceptively simple. The very repetition creates expectations and breaks them. Hardly anything happens, and everything is a surprise. “Immortal broken chords of radiantly white harmonies,” said Wanda Landowska. It is simple the way a Rembrandt drawing is simple. It does a lot with a little. Is it then rich in information? Certain music could be considered information poor. At one extreme John Cage’s composition titled 4′33″ contains no “notes” at all: just four minutes and thirty-three seconds of near silence, as the piece absorbs the ambient sounds around the still pianist—the listeners’ shifting in their seats, rustling clothes, breathing, sighing.
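The figure of 135 million bits for the CD recording can be checked with simple arithmetic. The sketch below assumes the usual audio CD parameters, which the text does not state: 44,100 samples per second, 16 bits per sample, and two stereo channels. The function name `cd_audio_bits` is hypothetical.

```python
def cd_audio_bits(seconds: int,
                  sample_rate_hz: int = 44_100,  # assumed: standard CD sample rate
                  bits_per_sample: int = 16,     # assumed: standard CD sample depth
                  channels: int = 2) -> int:     # assumed: stereo
    """Raw (uncompressed) size in bits of `seconds` of CD-quality audio."""
    return seconds * sample_rate_hz * bits_per_sample * channels


if __name__ == "__main__":
    duration = 1 * 60 + 36           # a minute and thirty-six seconds
    print(cd_audio_bits(duration))   # 135475200, about 135 million bits
```

Set against the six hundred notes of the manuscript, the raw recording spends more than two hundred thousand bits per note, which gives a sense of how much of the bitstream is performance detail rather than score.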

How much information in the Bach C-major Prelude? As a set of patterns, in time and frequency, it can be analyzed, traced, and understood, but only up to a point. In music, as in poetry, as in any art, perfect understanding is meant to remain elusive. If one could find the bottom it would be a bore.

In a way, then, the use of minimal program size to define complexity seems perfect—a fitting apogee for Shannon information theory. In another way it remains deeply unsatisfying. This is particularly so when turning to the big questions—one might say, the human questions—of art, of biology, of intelligence.

According to this measure, a million zeroes and a million coin tosses lie at opposite ends of the spectrum. The empty string is as simple as can be; the random string is maximally complex. The zeroes convey no information; coin tosses produce the most information possible. Yet these extremes have something in common. They are dull. They have no value. If either one were a message from another galaxy, we would attribute no intelligence to the sender. If they were music, they would be equally worthless.
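The contrast between the two extremes can be made concrete with an ordinary compressor. This is only a rough sketch: minimal program size cannot be computed in general, so the code below swaps in Python's `zlib` as a crude stand-in that gives an upper bound, not the true measure. The helper name `compressed_size` and the use of `os.urandom` as the source of "coin tosses" are assumptions made for illustration.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of `data` after zlib compression at the highest level.

    This is a stand-in for minimal program size: it can only
    overestimate it, never compute it exactly.
    """
    return len(zlib.compress(data, 9))


N_BITS = 1_000_000
N_BYTES = N_BITS // 8                 # 125,000 bytes hold a million bits

zeroes = bytes(N_BYTES)               # a million zero bits
coin_tosses = os.urandom(N_BYTES)     # a million (pseudo)random bits

if __name__ == "__main__":
    print("zeroes:     ", compressed_size(zeroes), "bytes")
    print("coin tosses:", compressed_size(coin_tosses), "bytes")
```

The zeroes shrink to a tiny fraction of their original length, while the random bytes do not shrink at all and typically come out slightly larger than the input because of the compressor's own overhead.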

Everything we care about lies somewhere in the middle, where pattern and randomness interlace.

Chaitin and a colleague, Charles H. Bennett, sometimes discussed these matters at IBM’s research center in Yorktown Heights, New York. Over a period of years, Bennett developed a new measure of value, which he called “logical depth.” Bennett’s idea of depth is connected to complexity but orthogonal to it. It is meant to capture the usefulness of a message, whatever usefulness might mean in any particular domain. “From the earliest days of information theory it has been appreciated that information per se is not a good measure of message value,” he wrote, finally publishing his scheme in 1988.

A typical sequence of coin tosses has high information content but little value; an ephemeris, giving the positions of the moon and planets every day for a hundred years, has no more information than the equations of motion and initial conditions from which it was calculated, but saves its owner the effort of recalculating these positions.

The amount of work it takes to compute something had been mostly disregarded—set aside—in all the theorizing based on Turing machines, which work, after all, so ploddingly. Bennett brought it back. There is no logical depth in the parts of a message that are sheer randomness and unpredictability, nor is there logical depth in obvious redundancy—plain repetition and copying. Rather, he proposed, the value of a message lies in “what might be called its buried redundancy—parts predictable only with difficulty, things the receiver could in principle have figured out without being told, but only at considerable cost in money, time, or computation.” When we value an object’s complexity, or its information content, we are sensing a lengthy hidden computation. This might be true of music or a poem or a scientific theory or a crossword puzzle, which gives its solver pleasure when it is neither too cryptic nor too shallow, but somewhere in between.