Antifragile: Things That Gain from Disorder

Authors: Nassim Nicholas Taleb

Third, in African countries, government officials get explicit bribes. In the United States they have the implicit, never mentioned, promise to go work for a bank at a later date with a sinecure offering, say, $5 million a year, if they are seen favorably by the industry. And the “regulations” of such activities are easily skirted.

What upset me the most about the Alan Blinder problem was the reaction of those with whom I discussed it: people found it natural that a former official would try to “make money” thanks to his former position—at our expense.

“Don’t people like to make money?” goes the argument.

You can always find an argument or an ethical reason to defend an opinion ex post. This is a dicey point, but, as with cherry-picking, one should propose an ethical rule before an action, not after. You want to prevent fitting a narrative to what you are doing—and for a long time “casuistry,” the art of arguing the nuances of decisions, was just that, fitting narratives.

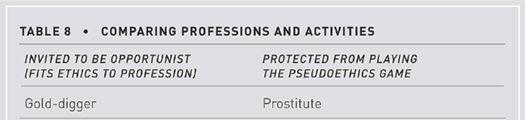

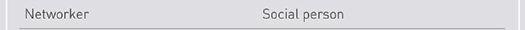

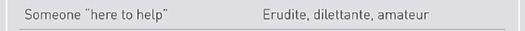

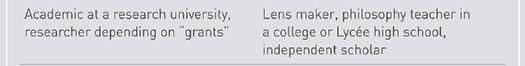

Let me first define a fraudulent opinion. It is simply one with vested interests generalized to the public good—in which, say, a hairdresser recommends haircuts “for the health of people,” or a gun lobbyist claims gun ownership is “good for America,” simply making statements that benefit him personally, while the statements are dressed up to look as if they were made for the benefit of the collective. In other words, is he in the left column of Table 7? Likewise, Alan Blinder wrote that he opposed generalized deposit insurance, not because his company would lose business, but because of the public good.

But the heuristic is easy to implement, with a simple question. I was in Cyprus at a conference dinner in which another speaker, a Cypriot professor of petrochemical engineering in an American university, was ranting against the climate activist Lord Nicholas Stern. Stern was part of the conference but absent from the dinner. The Cypriot was extremely animated. I had no idea what the issues were, but saw the notion of “absence of evidence” mixed with “evidence of absence” and pounced on him in defense of Stern, whom I had never met. The petrochemical engineer was saying that we had no evidence that fossil fuels caused harm to the planet, turning his point semantically into something equivalent in decision making to the statement that we had evidence that fossil fuels did not harm. He made the mistake of saying that Stern was recommending useless insurance, causing me to jump to ask him if he had car, health, and other insurance for events that did not take place, that sort of argument. I started bringing up the idea that we are doing something new to the planet, that the burden of evidence is on those who disturb natural systems, that Mother Nature knows more than he will ever know, not the other way around. But it was like talking to a defense lawyer—sophistry, and absence of convergence to truth.

Then a heuristic came to mind. I surreptitiously asked a host sitting next to me if the fellow had anything to gain from his argument: it turned out that he was deep into oil companies, as an advisor, an investor, and a consultant. I immediately lost interest in what he had to say and the energy to debate him in front of others—his words were nugatory, just babble.

Note how this fits into the idea of skin in the game. If someone has an opinion, like, say, the banking system is fragile and should collapse, I want him invested in it so he is harmed if the audience for his opinion are harmed—as a token that he is not an empty suit. But when general statements about the collective welfare are made, instead, absence of investment is what is required. Via negativa.

I have just presented the mechanism of ethical optionality by which people fit their beliefs to actions rather than fit their actions to their beliefs. Table 8 compares professions with respect to such ethical backfitting.

There exists an inverse Alan Blinder problem, called “evidence against one’s interest.” One should give more weight to witnesses and opinions when they present the opposite of a conflict of interest. A pharmacist or an executive of Big Pharma who advocates starvation and via negativa methods to cure diabetes would be more credible than another one who favors the ingestion of drugs.

This is a bit technical, so the reader can skip this section with no loss. But optionality is everywhere, and here is a place to discuss a version of cherry-picking that destroys the entire spirit of research and makes the abundance of data extremely harmful to knowledge. More data means more information, perhaps, but it also means more false information. We are discovering that fewer and fewer papers replicate—textbooks in, say, psychology need to be revised. As to economics, fuhgetaboudit. You can hardly trust many statistically oriented sciences—especially when the researcher is under pressure to publish for his career. Yet the claim will be “to advance knowledge.”

Recall the notion of epiphenomenon as a distinction between real life and libraries. Someone looking at history from the vantage point of a library will necessarily find many more spurious relationships than one who sees matters in the making, in the usual sequences one observes in real life. He will be duped by more epiphenomena, one of which is the direct result of the excess of data as compared to real signals.

We discussed the rise of noise in

Chapter 7

. Here it becomes a worse problem, because there is an optionality on the part of the researcher, no different from that of a banker. The researcher gets the upside, truth gets the downside. The researcher’s free option is in his ability to pick whatever statistics can confirm his belief—or show a good result—and ditch the rest. He has the

option

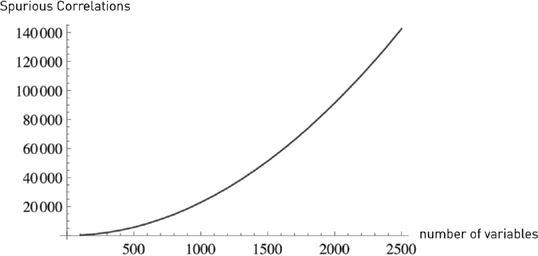

to stop once he has the right result. But beyond that, he can find statistical relationships—the spurious rises to the surface. There is a certain property of data: in large data sets, large deviations are vastly more attributable to noise (or variance) than to information (or signal).

1

FIGURE 18. The Tragedy of Big Data. The more variables, the more correlations that can show significance in the hands of a “skilled” researcher. Falsity grows faster than information; it is nonlinear (convex) with respect to data.
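The point about spurious relationships can be made concrete with a small simulation. What follows is only a sketch under stated assumptions, not the construction behind Figure 18: every series is pure Gaussian noise, independent of all the others, and a pair counts as "significant" when its sample correlation exceeds an arbitrary cutoff. The names `pearson` and `count_spurious`, the 50 observations per series, and the 0.3 cutoff are all illustrative choices that do not come from the text.

```python
import itertools
import math
import random


def pearson(x, y):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def count_spurious(n_variables, n_observations=50, threshold=0.3, seed=0):
    """Count pairs of independent noise series with |r| above the threshold.

    Every series is random noise, so any pair that clears the threshold
    is spurious by construction (hypothetical setup for illustration).
    """
    rng = random.Random(seed)
    series = [
        [rng.gauss(0.0, 1.0) for _ in range(n_observations)]
        for _ in range(n_variables)
    ]
    return sum(
        1
        for a, b in itertools.combinations(series, 2)
        if abs(pearson(a, b)) > threshold
    )


if __name__ == "__main__":
    for k in (5, 10, 20, 40, 80):
        pairs = k * (k - 1) // 2  # number of candidate relationships
        hits = count_spurious(k)
        print(f"{k:3d} variables, {pairs:5d} pairs, {hits} with |r| > 0.3")
```

With k variables there are k(k - 1)/2 pairs to test, so the pool of candidate relationships grows quadratically while the amount of real signal in this setup stays at zero. A researcher free to report only the pairs that clear the cutoff will always have something to show once k is large enough.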