In the Beginning Was Information (6 page)


Authors: Werner Gitt

Tags: #RELIGION / Religion & Science, #SCIENCE / Study & Teaching

Limit theorems:

Limit theorems describe boundaries that cannot be overstepped. In 1927, the German physicist Werner Heisenberg (1901–1976) published such a theorem, the so-called Heisenberg uncertainty principle. According to this principle, it is impossible to determine both the position and the velocity (more precisely, the momentum) of a particle exactly at a prescribed moment. The product of the two uncertainties can never be smaller than a specific natural constant, which rules out both uncertainties being vanishingly small at the same time. It follows, for example, that certain measurements can never be absolutely exact. This finding resulted in the collapse of the deterministic philosophy inherited from the 19th century. The affirmations of the laws of nature are so powerful that viewpoints held up to the time of their formulation may be rapidly discarded.
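As a rough numerical illustration of such a limit, the following sketch evaluates the lower bound on the velocity uncertainty implied by the standard form of the relation, Δx · Δp ≥ ħ/2 with Δp = m · Δv. The function name and the example of an electron confined to about 10^-10 m are assumptions chosen for illustration and do not come from the text.

```python
# Illustrative sketch: lower bound on the velocity uncertainty from
# Heisenberg's relation  dx * dp >= hbar / 2,  with dp = m * dv.
# The function name and the example values are hypothetical.

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # electron rest mass, kg


def min_velocity_uncertainty(mass_kg: float, position_uncertainty_m: float) -> float:
    """Smallest velocity uncertainty (m/s) compatible with a given position uncertainty."""
    if mass_kg <= 0 or position_uncertainty_m <= 0:
        raise ValueError("mass and position uncertainty must be positive")
    return HBAR / (2.0 * mass_kg * position_uncertainty_m)


if __name__ == "__main__":
    # Assumed example: an electron localized to roughly one atomic diameter.
    dv = min_velocity_uncertainty(ELECTRON_MASS, 1e-10)
    print(f"minimum velocity uncertainty: {dv:.3e} m/s")
```

The smaller the position uncertainty is made, the larger this bound becomes, so the two quantities cannot both be fixed exactly.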

Information theorems:

In conclusion, we mention that there is a series of theorems which should also be regarded as laws of nature, although they are not of a physical or a chemical nature. These laws will be discussed fully in this book, and all the previously mentioned criteria, N1 to N9, as well as the relevance statements R1 to R6, are also valid in their case.

2.6 Possible and Impossible Events

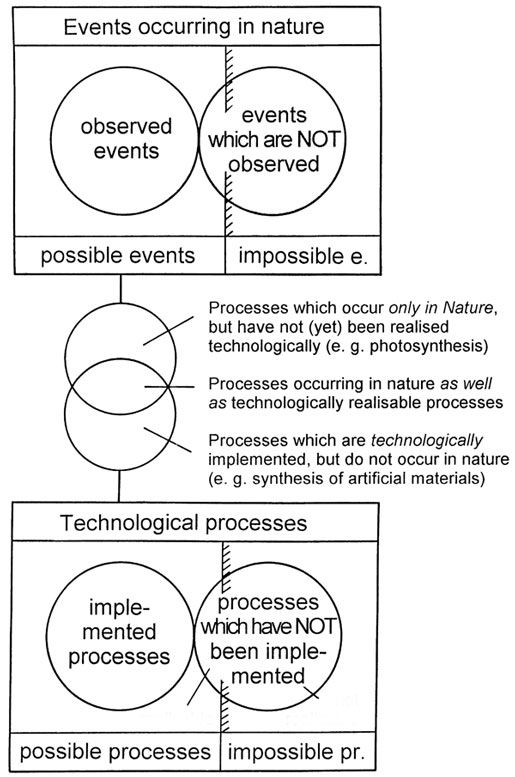

The totality of all imaginable events and processes can be divided into two groups as in Figure 7, namely,

a) possible events

b) impossible events.

Possible events occur under the "supervision" of the laws of nature, but it is in general not possible to describe all of them completely. On the other hand, impossible events could be identified by means of the so-called impossibility theorems.

Impossible events can be divided into two groups, those which are "fundamentally impossible," and those which are "statistically impossible." Events which contradict, for example, the energy law, are impossible in principle, because this theorem even holds for individual atoms. On the other hand, radioactive decay is a statistical law which is subject to the probability theorems, and cannot be applied to individual atoms, but in all practical cases, the number of atoms is so immense that an "exact" formulation can be used, namely $n(t) = n_0 \, e^{-k t}$. The decay constant k does not depend on temperature, nor on pressure, nor on any possible chemical bond. The half-life T is given by the formula T = ln 2/k; this indicates the time required for any given quantity $n_0$ to diminish to half as much, $n_0/2$. Since we are dealing with statistical events, one might expect that less than half the number of atoms or appreciably more than half could have decayed at time T. However, the probability of deviation from this law is so close to zero that we could regard it as statistically impossible. It should be clear that impossible events are neither observable nor recognizable nor measurable. Possible events have in general either been observed, or they are observable. However, there are other possible events about which it can be said that they

– cannot or cannot yet be observed (e.g., processes taking place in the sun’s interior)

– are in principle observable, but have never been observed
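The claim that deviations from the decay law are "statistically impossible" for large numbers of atoms can be sketched numerically. The code below is a minimal illustration and assumes that each atom decays independently with the same survival probability e^(-kt) (a binomial model, which is an assumption not spelled out in the text). The function names and the sample values are hypothetical.

```python
import math


def decay_constant(half_life: float) -> float:
    """Decay constant k obtained from T = ln 2 / k."""
    return math.log(2.0) / half_life


def remaining(n0: float, k: float, t: float) -> float:
    """Expected number of undecayed atoms, n(t) = n0 * exp(-k * t)."""
    return n0 * math.exp(-k * t)


def relative_fluctuation(n0: float, k: float, t: float) -> float:
    """Standard deviation of the surviving count divided by its mean.

    Assumes independent decays, so the surviving count is binomial
    with n0 trials and survival probability p = exp(-k * t).
    """
    p = math.exp(-k * t)
    mean = n0 * p
    std = math.sqrt(n0 * p * (1.0 - p))
    return std / mean


if __name__ == "__main__":
    T = 10.0                      # assumed half-life, arbitrary time units
    k = decay_constant(T)
    for n0 in (1e2, 1e10, 1e20):  # assumed sample sizes
        print(n0, remaining(n0, k, T), relative_fluctuation(n0, k, T))
```

Under this model the relative fluctuation at t = T equals 1/sqrt(n0): about 10 percent for 100 atoms, but only about one part in 10^10 for 10^20 atoms, which is why the "exact" formula is adequate in practice.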

Thus far, we have only discussed natural events, but now we can apply these concepts to technological processes (in the widest sense of the word, comprising everything that can be made by human beings). The following categories are now apparent:

1. possible processes

1.1 already implemented

1.2 not yet implemented, but realizable in principle

2. impossible processes: proposed processes of this kind are fundamentally unrealizable, because they are precluded by laws of nature.

The distinctions illustrated in Figure 7 follow from a comparison of possible events in nature and in technology, namely:

a) processes which occur only in nature, but have not (yet) been realized technologically (e.g., photosynthesis, the storage of information on DNA molecules, and life functions);

b) processes occurring in nature which are also technologically realizable (e.g., industrial synthesis of organic substances);

c) processes which have been technologically implemented, but do not occur in nature (e.g., synthesis of artificial materials).

Figure 7

Part 2

Information


Chapter 3

Information Is a Fundamental Entity

3.1 Information: A Fundamental Quantity

The trail-blazing discoveries about the nature of energy in the 19th century caused the first technological revolution, when manual labor was replaced on a large scale by technological appliances — machines which could convert energy. In the same way, knowledge concerning the nature of information in our time initiated the second technological revolution where mental "labor" is saved through the use of technological appliances — namely, data processing machines. The concept "information" is not only of prime importance for informatics theories and communication techniques, but it is a fundamental quantity in such wide-ranging sciences as cybernetics, linguistics, biology, history, and theology. Many scientists therefore justly regard information as the third fundamental entity alongside matter and energy.

Claude E. Shannon was the first researcher who tried to define information mathematically. The theory based on his findings had the advantages that different methods of communication could be compared and that their performance could be evaluated. In addition, the introduction of the bit as a unit of information made it possible to describe the storage requirements of information quantitatively. The main disadvantage of Shannon’s definition of information is that the actual contents and impact of messages were not investigated. Shannon’s theory of information, which describes information from a statistical viewpoint only, is discussed fully in the appendix (chapter A1).

The true nature of information will be discussed in detail in the following chapters, and statements will be made about information and the laws of nature. After a thorough analysis of the information concept, it will be shown that the fundamental theorems can be applied to all technological and biological systems and also to all communication systems, including such diverse forms as the gyrations of bees and the message of the Bible. There is only one prerequisite — namely, that the information must be in coded form.

Since the concept of information is so complex that it cannot be defined in one statement (see Figure 12), we will proceed as follows: We will formulate various special theorems which will gradually reveal more information about the "nature" of information, until we eventually arrive at a precise definition (compare chapter 5). Any repetition found in the contents of some theorems (redundancy) is intentional, and the possibility of having various different formulations, according to theorem N8 (paragraph 2.3), is also employed.

3.2 Information: A Material or a Mental Quantity?

We have indicated that Shannon’s definition of information encompasses only a very minor aspect of information. Several authors have repeatedly pointed out this defect, as the following quotations show:

Karl Steinbuch, a German information scientist [S11]: "The classical theory of information can be compared to the statement that one kilogram of gold has the same value as one kilogram of sand."

Warren Weaver, an American information scientist [S7]: "Two messages, one of which is heavily loaded with meaning and the other which is pure nonsense, can be exactly equivalent …as regards information."

Ernst von Weizsäcker [W3]: "The reason for the 'uselessness' of Shannon’s theory in the different sciences is frankly that no science can limit itself to its syntactic level."


The essential aspect of each and every piece of information is its mental content, and not the number of letters used. If one disregards the contents, then Jean Cocteau’s facetious remark is relevant: "The greatest literary work of art is basically nothing but a scrambled alphabet."
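The point made by Weaver and Cocteau can be illustrated with a short sketch. It computes a purely statistical information measure in bits from the character frequencies of a text, which is a simplified stand-in for Shannon's measure and not the formulation given in the appendix. The function name `shannon_bits` and the sample sentence are assumptions made for illustration.

```python
import math
import random
from collections import Counter


def shannon_bits(text: str) -> float:
    """Statistical information of a text in bits.

    Uses the character frequencies of the text itself as the
    probability model (a simplifying assumption).
    """
    if not text:
        return 0.0
    counts = Counter(text)
    n = len(text)
    # Sorting makes the summation order independent of character order.
    return -sum(c * math.log2(c / n) for c in sorted(counts.values()))


if __name__ == "__main__":
    message = "in the beginning was information"
    letters = list(message)
    random.Random(0).shuffle(letters)   # fixed seed for a repeatable scramble
    scrambled = "".join(letters)
    print(repr(message), shannon_bits(message))
    print(repr(scrambled), shannon_bits(scrambled))
```

Because the measure depends only on how often each character occurs, the meaningful sentence and its scrambled version receive exactly the same value, although only one of them conveys any content.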

At this stage we want to point out a fundamental fallacy that has already caused many misunderstandings and has led to seriously erroneous conclusions, namely the assumption that information is a material phenomenon. The philosophy of materialism is fundamentally predisposed to relegate information to the material domain, as is apparent from philosophical articles emanating from the former DDR (East Germany) [S8 for example]. Even so, the former East German scientist J. Peil [P2] writes: "Even the biology based on a materialistic philosophy, which discarded all vitalistic and metaphysical components, did not readily accept the reduction of biology to physics…. Information is neither a physical nor a chemical principle like energy and matter, even though the latter are required as carriers."

Also, according to a frequently quoted statement by the American mathematician Norbert Wiener (1894–1964), information cannot be a physical entity [W5]: "Information is information, neither matter nor energy. Any materialism which disregards this, will not survive one day."

Werner Strombach, a German information scientist of Dortmund [S12], emphasizes the nonmaterial nature of information by defining it as an "enfolding of order at the level of contemplative cognition."

The German biologist G. Osche [O3] sketches the unsuitability of Shannon’s theory from a biological viewpoint, and also emphasizes the nonmaterial nature of information: "While matter and energy are the concerns of physics, the description of biological phenomena typically involves information in a functional capacity. In cybernetics, the general information concept quantitatively expresses the information content of a given set of symbols by employing the probability distribution of all possible permutations of the symbols. But the information content of biological systems (genetic information) is concerned with its 'value' and its 'functional meaning,' and thus with the semantic aspect of information, with its quality."

Hans-Joachim Flechtner, a German cyberneticist, referred to the fact that information is of a mental nature, both because of its contents and because of the encoding process. This aspect is, however, frequently underrated [F3]: "When a message is composed, it involves the coding of its mental content, but the message itself is not concerned about whether the contents are important or unimportant, valuable, useful, or meaningless. Only the recipient can evaluate the message after decoding it."

3.3 Information: Not a Property of Matter!

It should now be clear that information, being a fundamental entity, cannot be a property of matter, and its origin cannot be explained in terms of material processes. We therefore formulate the following fundamental theorem:

Theorem 1: The fundamental quantity information is a non-material (mental) entity. It is not a property of matter, so that purely material processes are fundamentally precluded as sources of information.

Figure 8 illustrates the known fundamental entities: mass, energy, and information. Mass and energy are undoubtedly of a material-physical nature, and for both of them important conservation laws play a significant role in physics and chemistry and in all derived applied sciences. Mass and energy are linked by means of Einstein’s equivalence formula, $E = m c^2$. In the left part of Figure 8, some of the many chemical and physical properties of matter in all its forms are illustrated, together with the defined units. The right-hand part of Figure 8 illustrates nonmaterial properties and quantities, where information, I, belongs.