The Design of Future Things

Authors: Don Norman

When things go wrong, or when we wish to change the usual operation for a special occasion, we need feedback to instruct us how to do it. Then, we need feedback for reassurance that our request is being performed as we wished, and some indication about what will happen next: does the system revert to its normal mode, or is it now forever in the special mode? So, feedback is important for the following reasons:

• reassurance
• progress reports and time estimates
• learning
• special circumstances
• confirmation
• governing expectations

Today, many automatic devices do provide minimal feedback, but much of the time it is through bleeps and burps, ring tones and flashing lights. This feedback is more annoying than informing, and even when it does inform, it provides partial information at best. In commercial settings, such as manufacturing plants, electric generating plants, hospital operating rooms, or inside the cockpits of aircraft, when problems arise, many different monitoring systems and pieces of equipment sound alarms. The resulting cacophony can be so disturbing that the people involved may waste precious time turning all the alarms off so that they can concentrate on fixing the problems.

As we move toward an increasing number of intelligent, autonomous devices in our environment, we also need to transition toward a more supportive form of two-way interaction. People need information that facilitates discovery of the situation and that guides them in deciding how to respond or, for that matter, reassures them that no action is required. The interaction has to be continuous, yet nonintrusive, demanding little or no attention in most cases, requiring attention only when it is truly appropriate. Much of the time, especially when everything is working as planned, people only need to be kept in the loop, continually aware of the current state and of any possible problems ahead. Beeps won't work. Neither will spoken language. It has to be effective, yet in the periphery, so that it won't disturb other activities.

Ourselves?

In The Design of Everyday Things, I show that when people have difficulties with technology, invariably the technology or the design is at fault. “Don't blame yourself,” I explain to my readers. “Blame the technology.” Usually this is correct, but not always. Sometimes it is better when people blame themselves for a failure. Why? Because if it is the fault of the design or the technology, one can do nothing except become frustrated and complain. If it is a person's fault, perhaps the person can change and learn to work the technology. Where might this be true? Let me tell you about the Apple Newton.

In 1993, I left the comfortable life of academia and joined Apple Computer. My baptism into the world of commerce was rapid and rough, from being on the study team to determine whether and how AT&T should purchase Apple Computer, or at the very least form a joint venture, to watching over the launch of the Apple Newton. Both ventures failed, but the Newton failure was the more instructive case.

Ah, the Newton. A brilliant idea, introduced with great hoopla and panache. The Newton was the first sensible personal data assistant. It was a small device by the standards of the time, portable, and controlled solely by handwriting on a touch-sensitive screen. The story of the Newton is complex (books have been written about it), but here let me talk about one of its most deadly sins: the Newton's handwriting recognition system.

The Newton claimed to be able to interpret handwriting, transforming it into printed text. Great, except that this was back in 1993, and up to that time, there were no successful handwriting recognition systems. Handwriting recognition poses a very difficult technical challenge, and even today there are no completely successful systems. The Newton system was developed by a group of Russian scientists and programmers in a small company, Paragraph International. The system was technically sophisticated, but it flunked my rule of human-machine interaction, which is to be intelligible.

The system first performed a mathematical transformation on the handwriting strokes of each word into a location within an abstract, mathematical space of many dimensions, then matched what the user had written to its database of English words, picking the word that was closest in distance within this abstract space. If this sentence confuses you about how Newton recognized words, then you understand properly. Even sophisticated users of Newton could not explain the kinds of errors that it made. When the word recognition system worked, it was very, very good, and when it failed, it was horrid. The problem was caused by the great difference between the sophisticated mathematical multidimensional space that it was using and a person's perceptual judgments. There seemed to be no actual relationship between what was written and what the system produced. In fact, there was a relationship, but it was in the realm of sophisticated mathematics, invisible to the person who was trying to make sense of its operation.
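The whole-word matching described above can be sketched as a nearest-neighbor lookup. This is only an illustrative sketch: the three-dimensional vectors, the tiny vocabulary, and the function name `nearest_word` are all invented here, and nothing in it reflects the actual features or distance measure the Newton used. It shows how an input that lands slightly off target in the abstract space can come back as a word that shares no letters with what was written.

```python
import math

# Hypothetical feature vectors: each dictionary word is a point in an
# abstract space. The numbers are invented for illustration only.
VOCABULARY = {
    "hand": (0.9, 0.1, 0.4),
    "nand": (0.8, 0.2, 0.5),
    "egg": (0.2, 0.7, 0.1),
    "catching": (0.3, 0.8, 0.2),
}


def nearest_word(features, vocabulary=VOCABULARY):
    """Return the vocabulary word whose vector is closest to `features`.

    Closeness is plain Euclidean distance, an assumption made for this sketch.
    """
    return min(vocabulary, key=lambda word: math.dist(features, vocabulary[word]))


# Strokes intended as "catching" that happen to map to this point are
# matched to "egg", because that point is nearer in the abstract space.
print(nearest_word((0.25, 0.72, 0.12)))
```

From the writer's side, nothing about the strokes explains the result; the explanation lives entirely in coordinates the writer never sees.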

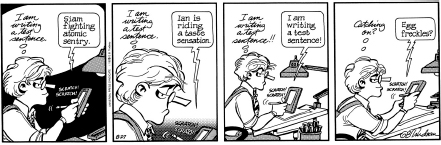

The Newton was released with great fanfare. People lined up for hours to be among the first to get one. Its ability to recognize handwriting was touted as a great innovation. In fact, it failed miserably, providing rich fodder for cartoonist Garry Trudeau, an early adopter. He used his comic strip, Doonesbury, to poke fun at the Newton. Figure 6.1 shows the best-known example, a strip that detractors and fans of the Newton labeled “Egg Freckles.” I don't know if writing the words “Catching on?” would really turn into “Egg freckles?” but given the bizarre output that the Newton often produced, it's entirely possible.

FIGURE 6.1 Garry Trudeau's Doonesbury strip “Egg Freckles,” widely credited with dooming the success of Apple Computer's Newton. The ridicule was deserved, but the public forum of this popular comic strip was devastating. The real cause? Completely unintelligible feedback. Doonesbury © 1993 G. B. Trudeau. (Reprinted with permission of Universal Press Syndicate. All rights reserved.)

The point of this discussion is not to ridicule the Newton but rather to learn from its shortcomings. The lesson is about human-machine communication: always make sure a system's response is understandable and interpretable. If it isn't what the person expected, there should be an obvious action the person can take to get to the desired response.

Several years after “Egg Freckles,” Larry Yeager, working in Apple's Advanced Technology Group, developed a far superior method for recognizing handwriting. Much more importantly, however, the new system, called “Rosetta,” overcame the deadly flaw of the Paragraph system: errors could now be understood. Write “hand” and the system might recognize “nand”: people found this acceptable because the system got most of the letters right, and the one it missed, “h,” does look like an “n.” If you write “Catching on?” and get “Egg freckles?” you blame the Newton, deriding it as “a stupid machine.” But if you write “hand” and get “nand,” you blame yourself: “Oh, I see,” you might say to yourself, “I didn't make the first line on the 'h' high enough so it thought it was an 'n'.”

Notice how the conceptual model completely reverses the notion of where blame is to be placed. Conventional wisdom among human-centered designers is that if a device fails to deliver the expected results, it is the device or its design that should be blamed. When the machine fails to recognize handwriting, especially when the reason for the failure is obscure, people blame the machine and become frustrated and angry. With Rosetta, however, the situation is completely reversed: people are quite happy to place the blame on themselves if it appears that they did something wrong, especially when what they are required to do appears reasonable. Rather than becoming frustrated, they simply resolve to be more careful next time.

This is what really killed the Newton: people blamed it for its failure to recognize their handwriting. By the time Apple released a sensible, successful handwriting recognizer, it was too late. It was not possible to overcome the earlier negative reaction and scorn. Had the Newton featured a less accurate, but more understandable, recognition system from the beginning, it might have succeeded. The early Newton is a good example of how any design unable to give meaningful feedback is doomed to failure in the marketplace.

When Palm released their personal digital assistant (initially called the “Palm Pilot”) in 1996, they used an artificial language, “Graffiti,” that required the user to learn a new way of writing. Graffiti used artificial letter shapes, similar to the normal printed alphabet, but structured so as to make the machine's task as easy as possible. The letters were similar enough to everyday printing that they could be learned without much effort. Graffiti didn't try to recognize whole words; it operated letter by letter, so when it made an error, it was only on a single letter, not the entire word. In addition, it was easy to find a reason for the error in recognition. These understandable, sensible errors made it easy for people to see what they might have done wrong and provided hints as to how to avoid the mistake the next time. The errors were actually reassuring, helping everyone develop a good mental model for how the recognition worked, helping people gain confidence, and helping them improve their handwriting. Newton failed; Palm succeeded.

Feedback is essential to the successful understanding of any system, essential for our ability to work in harmony with machines. Today, we rely too much on alarms and alerts that are too sudden, intrusive, and not very informative. Signals that simply beep, vibrate, or flash usually don't indicate what is wrong, only that something isn't right. By the time we have figured out the problem, the opportunity to take corrective action may have passed. We need a more continuous, more natural way of staying informed of the events around us. Recall poor Prof. M: without feedback, he couldn't even figure out if his own system was working.

What are some ways to provide better methods of feedback? The foundation for the answer was laid in chapter 3, “Natural Interaction”: implicit communication, natural sounds and events, calm, sensible signals, and the exploitation of natural mappings between display devices and our interpretations of the world.

Watch someone helping a driver maneuver into a tight space. The helper may stand beside the car, visible to the driver, holding two hands apart to indicate the distance remaining between the car and the obstacle. As the car moves, the hands move closer together. The nice thing about this method of guidance is that it is natural: it does not have to be agreed upon beforehand; no instruction or explanation is needed.

Implicit signals can be intentional, either deliberately created by a person, as in the example above, or deliberately created in a machine by the designer. There are natural ways to communicate with people that convey precise information without words and with little or no training. Why not use these methods as a way of communicating between people and machines?

Many modern automobiles have parking assistance devices that indicate how close the auto is to the car ahead or behind. An indicator emits a series of beeps: beep (pause), beep (pause), beep. As the car gets closer to the obstacle, the pauses get shorter, so the rate of beeping increases. When the beeps become continuous, it is time to stop: the car is about to hit the obstacle. As with the hand directions, this natural signal can be understood by a driver without instruction.
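The mapping from distance to beep rate can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular car implements it: the function name `beep_pause_seconds`, the linear mapping, and the default values (a 1.5 m sensing range, a 0.3 m stop distance, a 1.0 s longest pause) are all hypothetical.

```python
def beep_pause_seconds(distance_m, max_range_m=1.5, stop_distance_m=0.3,
                       max_pause_s=1.0):
    """Return the pause between beeps for a given distance to the obstacle.

    Returns None when the obstacle is out of range (the indicator stays
    silent) and 0.0 when the beeps should merge into a continuous tone.
    All thresholds are assumed values chosen for illustration.
    """
    if distance_m >= max_range_m:
        return None  # obstacle too far away: no beeping at all
    if distance_m <= stop_distance_m:
        return 0.0  # continuous tone: time to stop
    # Between the two thresholds, shrink the pause linearly with distance.
    fraction = (distance_m - stop_distance_m) / (max_range_m - stop_distance_m)
    return max_pause_s * fraction


# The pause shrinks as the car approaches the obstacle.
for distance in (2.0, 1.2, 0.6, 0.2):
    print(distance, beep_pause_seconds(distance))
```

Because the pause shrinks steadily toward zero, the driver hears the distance closing without having to learn any code, much like watching the helper's hands move together.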

Natural signals, such as the clicks of the hard drive after a command or the familiar sound of water boiling in the kitchen, keep people informed about what is happening in the environment. These signals offer just enough information to provide feedback, but not enough to add to cognitive workload. Mark Weiser and John Seely Brown, two research scientists working at what was then the Xerox Corporation's Palo Alto Research Center, called this “calm technology,” which “engages both the center and the periphery of our attention, and in fact moves back and forth between the two.” The center is what we are attending to, the focal point of conscious attention. The periphery includes all that happens outside of central awareness, while still being noticeable and effective. In the words of Weiser and Brown:

We use “periphery” to name what we are attuned to without attending to explicitly. Ordinarily when driving our attention is centered on the road, the radio, our passenger, but not the noise of the engine. But an unusual noise is noticed immediately, showing that we were attuned to the noise in the periphery, and could come quickly to attend to it. . . . A calm technology will move easily from the periphery of our attention, to the center, and back. This is fundamentally encalming, for two reasons.

First, by placing things in the periphery, we are able to attune to many more things than we could if everything had to be at the center. Things in the periphery are attuned to by the large portion of our brains devoted to peripheral (sensory) processing. Thus, the periphery is informing without overburdening.

Second, by recentering something formerly in the periphery, we take control of it.