Ancient DNA: Methods and Protocols (37 page)

Read Ancient DNA: Methods and Protocols Online

Authors: Beth Shapiro

22 Case Study: Enrichment of Ancient Mitochondrial DNA…

195

is able to facilitate large-scale studies on phylogeography and genetics of ancient populations. The utilized protocol allowed the custom design of bait molecules in a standard molecular lab setting.

This method is associated with lower costs than array capture and can be seen as an alternative when shorter loci, up to kilobases in length, are to be enriched from ancient DNA.

Acknowledgments

I would like to thank the Volkswagen foundation and Max Planck society for funding, M Meyer, the sequencing group and the bioinformatics group at the MPI EVA for their support in high-throughput sequencing, M Kircher for help in sequence analysis, and M Stiller for critical reading of this manuscript. C Schouwenburg, D Makowiecki, and T Kuznetsova provided the beaver samples

shown in Fig. 1 .

References

1. Rasmussen M et al (2010) Ancient human 8. Kircher M, Stenzel U, Kelso J (2009) Improved genome sequence of an extinct Palaeo-Eskimo.

base calling for the Illumina Genome Analyzer

Nature 463(7282):757–762

using machine learning strategies. Genome

2. Green RE et al (2006) Analysis of one million

Biol 10(8):R83

base pairs of Neanderthal DNA. Nature 9. Li H, Durbin R (2009) Fast and accurate 444(7117):330–336

short read alignment with Burrows—

3. Reich D et al (2010) Genetic history of an

Wheeler transform. Bioinformatics 25(14):

archaic hominin group from Denisova Cave in

1754–1760

Siberia. Nature 468(7327):1053–1060

10. Hall TA (1999) BioEdit: a user-friendly bio—

4. Durka W et al (2005) Mitochondrial phylo—

logical sequence alignment editor and analysis

geography of the Eurasian beaver

Castor fi ber

program for Windows 95/98/NT. Nucleic

L. Mol Ecol 14(12):3843–3856

Acids Symp Ser 41:95–98

5. Rohland N, Hofreiter M (2007) Ancient DNA

11. Kumar S, Tamura K, Nei M (2004) MEGA3:

extraction from bones and teeth. Nat Protoc

integrated software for molecular evolutionary

2(7):1756–1762

genetics analysis and sequence alignment. Brief

6. Meyer M, Kircher M (2010) Illumina sequenc—

Bioinform 5(2):150–163

ing library preparation for highly multiplexed 12. Kircher M, Kelso J (2010) High-throughput target capture and sequencing. Cold Spring

DNA sequencing—concepts and limitations.

Harb Protoc 2010(6):pdb.prot5448. Bioessays 32(6):524–536

doi: 10.1101/pdb.prot5448

13. Dohm JC et al (2008) Substantial biases in

7. Meyer M, Stenzel U, Hofreiter M (2008)

ultra-short read data sets from high-through—

Parallel tagged sequencing on the 454 plat—

put DNA sequencing. Nucleic Acids Res

form. Nat Protoc 3(2):267–278

36(16):e105

sdfsdf

Analysis of High-Throughput Ancient DNA Sequencing Data Martin Kircher

Abstract

Advances in sequencing technologies have dramatically changed the fi eld of ancient DNA (aDNA). It is now possible to generate an enormous quantity of aDNA sequence data both rapidly and inexpensively. As aDNA sequences are generally short in length, damaged, and at low copy number relative to coextracted environmental DNA, high-throughput approaches offer a tremendous advantage over traditional sequencing approaches in that they enable a complete characterization of an aDNA extract. However, the particular qualities of aDNA also present specifi c limitations that require careful consideration in data analysis. For example, results of high-throughout analyses of aDNA libraries may include chimeric sequences, sequencing error and artifacts, damage, and alignment ambiguities due to the short read lengths. Here, I describe typical primary data analysis workfl ows for high-throughput aDNA sequencing experiments, including (1) separation of individual samples in multiplex experiments; (2) removal of protocol-specifi c library artifacts; (3) trimming adapter sequences and merging paired-end sequencing data; (4) base quality score fi ltering or quality score propagation during data analysis; (5) identifi cation of endogenous molecules from an environmental background; (6) quantifi cation of contamination from other DNA sources; and (7) removal of clonal amplifi cation products or the compilation of a consensus from clonal amplifi cation products, and their exploitation for estimation of library complexity.

Key words:

High-throughput sequencing

,

Next-generation sequencing

,

Illumina/Solexa ,

454 ,

SOLiD-barcode ,

Sample index

,

Adapters ,

Chimeric sequences

,

Quality scores

,

Endogenous DNA

,

Contamination , Ancient DNA

1. Introduction

The advent of high-throughput sequencing (HTS) technologies has dramatically changed the scope of ancient DNA (aDNA)

research. Beginning with Roche’s 454 instrument in 2005

( 1 )

, and quickly followed by technologies from Illumina

( 2

) , Life technologies

( 3

) and other companies

( 4– 6 )

, it is now possible to generate gigabases of sequence data within only hours or days. Shotgun

sequencing of aDNA extracts ( 7– 9 )

or aDNA libraries that have Beth Shapiro and Michael Hofreiter (eds.),

Ancient DNA: Methods and Protocols

, Methods in Molecular Biology, vol. 840, DOI 10.1007/978-1-61779-516-9_23, © Springer Science+Business Media, LLC 2012

197

198

M. Kircher

been enriched for specifi c loci

( 10– 13 )

provide a new window into preserved genetic material. For example, the fi rst high coverage mitochondrial genomes

( 14– 16 )

made it possible to characterize DNA preser

vation, contamination, and damage

( 17– 19

) to an

extent that had not been achieved previously. As the cost of sequencing continues to decrease

( 20, 21

) , it has become feasible to analyze entire genomes of ancient samples

( 7– 9, 22

) , including those for which the endogenous DNA makes up only a very small percentage of the total DNA extracted

( 8

) .

While the application of HTS to aDNA research is promising, the consequent increase in the amount of sequence data produced presents another challenge: effi cient and reliable data postprocessing.

Rather than aligning individual sequencing reads, millions of short reads, generally between ~36 and 300 nucleotides (nt) in length, must be analyzed. The highly fragmented nature of aDNA is ideal for such short-read technologies. However, other characteristics of aDNA extracts, including postmortem damage, the presence of coextracted DNA from environmental and other contaminants, and, often, a large evolutionary distance between the ancient sample and its closest modern refer

ence sequence ( 23

) , can be problematic. These and various platform-specifi c problems can lead to substantial variation in run quality, high error rates, and adapter/chimera sequences, all of which confound assembly and analysis

( 20, 24, 25, 26

) .

Here, I outline typical primary data analysis workfl ows for aDNA experiments using HTS. I describe seven specifi c bioinformatics workfl ows: (1) separation of individual samples in multiplex experiments; (2) removal of protocol-specifi c library artifacts; (3) trimming adapter sequences and merging paired-end sequencing data; (4) base quality score fi ltering or quality score propagation during data analysis; (5) identifi cation of endogenous molecules from an environmental background; (6) quantifi cation of contamination from other DNA sources; and (7) removal of clonal amplifi cation products and/or the compilation of a consensus from clonal amplifi cation products, and their exploitation for estimating library complexity.

The workfl ows described below assume a paired-end Illumina Genome Analyzer data set, e.g., see refs.

( 8, 11,

27, 28 )

. However, the instructions provided should apply to most types of HTS data sets generated from aDNA libraries.

2. Materials

2.1. Output Files from

the Sequencing

Each HTS platform, and, often, different versions of the same plat-

Platform

form, produces slightly different output fi les. Non-vendor software packages are available that claim to improve the data quality of the original output fi le

( 29– 33

) , and these, too, may produce a different type of output fi le. To simplify presentation of a bioinformatics workfl ow that is generalizable across platforms, I assume that the

23 Analysis of High-Throughput Ancient DNA Sequencing Data 199

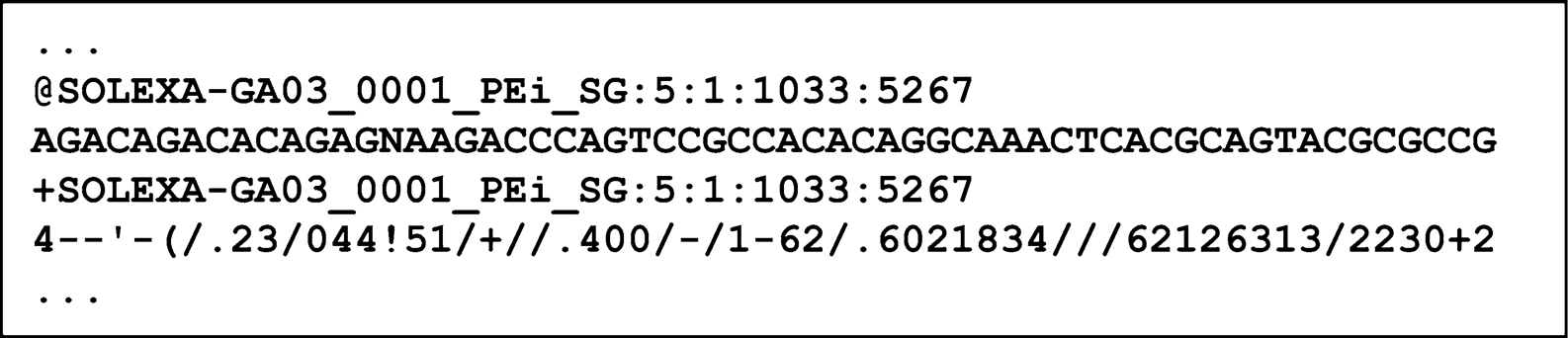

Fig. 1. A typical FastQ File. A FastQ fi le begins with “@” followed by a unique sequence identifi er (these are platform specifi c; shown is an Illumina Genome Analyzer read ID providing run ID, lane, tile, and

X

–

Y

-coordinates). The next line contains the sequence. The third line begins with “+” and can be followed again by the complete read identifi er. The fourth line contains the quality scores. This example encodes quality scores using the Sanger standard ( see Note 3 ) with ASCII characters from 33

to 127 encoding base qualities in PHRED-scale between 0 and 94 (e.g., “4” (ASCII 52) corresponds to PHRED quality score 19

and thus an error likelihood of 1.26%, while “-” (ASCII 45) corresponds to PHRED 12 and an error probability of 6.31%).

user will begin with a fi le of nucleotide sequence data with a quality score associated with every base.

The most common fi le format used both in data exchange and as input for post-sequencing software is currently the FastQ format.

FastQ is an extension of the FASTA sequence format, where each sequence in the fi le is associated with an identifying tag and with an additional line for quality scor

es (see Fig. 1 ). Depending on which

HTS platform is used, it may be necessary to convert output fi les to FastQ format for further processing (see Notes 1 and 2).

Unfortunately, there is no universally accepted rule regarding how quality scores are encoded. It is generally recommended to follow the Sanger standard (one character per quality score with an offset of 33, see Note 3), as this is currently the most widely used format.

Always consult the documentation and default parameters of the software you use for specifi c requirements of the FastQ input fi les.

2.2. Hardware

Given the large amount of data generated by HTS, the FastQ sequence fi les can be very large: each may contain several million sequence reads, and 4 times as many lines. The effi cient processing of these gigabyte-sized text fi les requires access to computational resources that typically exceed normal desktop computers (minimum requirements: 4–8

cores, 16–32 GB of memory, ~500 GB disk space for intermediate and output fi les). Due to the large fi le sizes, it is advisable to store compressed versions of these fi les in order to reduce input/output operation bottlenecks on network and local fi le systems.

2.3. Software

Most software currently available for data processing runs on UNIX-based systems such as Linux and Mac OS, but may also

work in a Windows cygwin envir

onment ( 34 )

. Python, bash, or Perl scripts can be effi cient for linear extraction of information from text fi les and for writing to intermediate and output text fi les.

Available bioinfor

matics packages (e.g., bioperl ( 35

) and biopy-thon

( 36 )

) provide useful functions and data structures for working with FastQ and sequence data in general. Large amounts of 200

M. Kircher

sequencing data may, in some applications, create the need for more effi

cient and indexed data storage (e.g., bioHDF

Google’s BigTable

( 38 )

, Apache Hadoop

( 39 )

).

The example workfl ows provided below assume a Linux operating system, in which the necessary programs/scripts can be called from a central installation.

2.4. Programs

List of programs and scripts that will be required in the protocols

and Scripts

described below.

BWA (v0.5.8a)

http://bio-bwa.sourceforge.net

KeyAdapterTrimFastQ_cc,

QualityFilterFastQ.py,

SplitMerged2Bwa.py, ContTestBWA.