Data Mining (58 page)

Authors: Mehmed Kantardzic

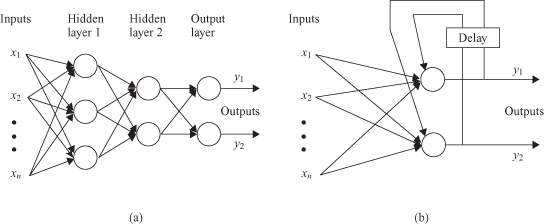

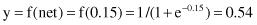

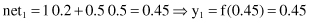

Now that we have introduced the basic components of an artificial neuron and its functionality, we can analyze all the processing phases in a single neuron. For example, for a neuron with three inputs and one output, the corresponding input values, weight factors, and bias are given in Figure 7.2a. We need to find the output y for different activation functions: symmetrical hard limit, saturating linear, and log-sigmoid.

1. Symmetrical hard limit
2. Saturating linear
3. Log-sigmoid

Figure 7.2. Examples of artificial neurons and their interconnections. (a) A single node; (b) three interconnected nodes.
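The single-neuron computation described above can be sketched in a few lines of Python. This is a minimal illustration, not the book's worked solution: the input values, weights, and bias below are hypothetical stand-ins, because the numbers from Figure 7.2a are not reproduced in the text. The function names are likewise chosen here for readability, and the activation functions follow their standard definitions.

```python
import math

def symmetrical_hard_limit(net):
    """Return +1 when net >= 0, otherwise -1."""
    return 1.0 if net >= 0 else -1.0

def saturating_linear(net):
    """Pass net through unchanged inside [0, 1]; clamp it outside."""
    return min(1.0, max(0.0, net))

def log_sigmoid(net):
    """Squash net smoothly into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-net))

def neuron_output(inputs, weights, bias, activation):
    """Compute the weighted sum plus bias, then apply the activation."""
    net = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(net)

# Hypothetical values (assumed for illustration, not taken from the figure).
inputs = [1.0, -0.5, 0.2]
weights = [0.4, 0.6, 0.5]
bias = 0.1

for activation in (symmetrical_hard_limit, saturating_linear, log_sigmoid):
    y = neuron_output(inputs, weights, bias, activation)
    print(f"{activation.__name__}: y = {y:.4f}")
```

The same net value is computed once per call and only the final activation step differs, which is why a single node can be analyzed under all three functions with almost no extra work.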

The basic principles of computation for one node may be extended to an ANN with several nodes, even if they are in different layers, as given in Figure 7.2b. Suppose that for the given configuration of three nodes all bias values are equal to 0 and the activation functions for all nodes are symmetric saturating linear. What is the final output y₃ from node 3?

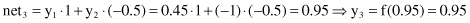

The processing of input data is layered. In the first step, the neural network performs the computation for nodes 1 and 2 that are in the first layer:

Outputs y₁ and y₂ from the first-layer nodes are inputs for node 3 in the second layer:

As we can see from the previous examples, the processing steps at the node level are very simple. In highly connected networks of artificial neurons, the number of computations grows with the number of nodes, and the complexity of processing depends on the ANN architecture.
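The layered computation for the three-node configuration can be sketched as follows. This is an illustrative sketch under stated assumptions: the two network inputs and all weights are hypothetical (the values from Figure 7.2b are not reproduced in the text), and both first-layer nodes are assumed to receive the same two inputs. As in the example, all biases are 0 and every node uses the symmetric saturating linear activation.

```python
def symmetric_saturating_linear(net):
    """Pass net through unchanged inside [-1, 1]; clamp it outside."""
    return max(-1.0, min(1.0, net))

def node(inputs, weights, bias=0.0):
    """One artificial neuron: weighted sum plus bias, then activation."""
    net = sum(x * w for x, w in zip(inputs, weights)) + bias
    return symmetric_saturating_linear(net)

def forward(x, w1, w2, w3):
    """Propagate inputs through nodes 1 and 2, then through node 3."""
    y1 = node(x, w1)          # first layer
    y2 = node(x, w2)          # first layer
    y3 = node([y1, y2], w3)   # second layer takes y1 and y2 as inputs
    return y1, y2, y3

# Hypothetical inputs and weights (assumed for illustration only).
x = [0.8, -0.4]
w1 = [1.0, 0.5]     # weights into node 1
w2 = [-2.0, 1.0]    # weights into node 2
w3 = [0.5, -0.25]   # weights into node 3

y1, y2, y3 = forward(x, w1, w2, w3)
print(f"y1 = {y1:.2f}, y2 = {y2:.2f}, y3 = {y3:.2f}")
```

With these assumed weights, node 2 receives a net input below -1, so its output saturates before being passed on to node 3. Each node still performs only one weighted sum and one activation call.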

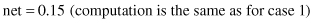

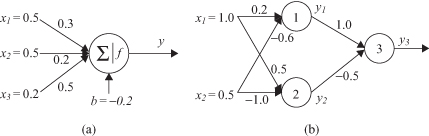

7.2 ARCHITECTURES OF ANNS

The architecture of an ANN is defined by the characteristics of a node and the characteristics of the node’s connectivity in the network. The basic characteristics of a single node were given in the previous section; this section introduces the parameters of connectivity. Typically, a network architecture is specified by the number of inputs to the network, the number of outputs, the total number of elementary nodes (usually identical processing elements throughout the network), and their organization and interconnections. Neural networks are generally classified into two categories on the basis of the type of interconnections: feedforward and recurrent.

The network is feedforward if the processing propagates from the input side to the output side in one direction only, without any loops or feedback. In a layered representation of the feedforward neural network, there are no links between nodes in the same layer; outputs of nodes in a specific layer are always connected as inputs to nodes in succeeding layers. This representation is preferred because of its modularity, that is, nodes in the same layer have the same functionality or generate the same level of abstraction about input vectors. If there is a feedback link that forms a circular path in a network (usually with a delay element as a synchronization component), then the network is recurrent. Examples of ANNs belonging to both classes are given in Figure 7.3.

Figure 7.3. Typical architectures of artificial neural networks. (a) Feedforward network; (b) recurrent network.
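The distinction between the two classes can be expressed as a simple graph test: a network is feedforward when its connection graph contains no circular path, and recurrent otherwise. The sketch below is one way to check this with a depth-first search. The dictionary representation, the node names, and the two example networks are assumptions made for illustration and do not reproduce Figure 7.3. The check covers only the loop-free condition, not the additional layered-representation rule that nodes in the same layer are not linked.

```python
def is_feedforward(connections):
    """Return True if the directed connection graph has no circular path.

    `connections` maps each node name to the nodes that receive its output.
    """
    UNVISITED, IN_PROGRESS, DONE = 0, 1, 2
    nodes = set(connections)
    for targets in connections.values():
        nodes.update(targets)
    state = {n: UNVISITED for n in nodes}

    def has_cycle_from(n):
        state[n] = IN_PROGRESS
        for m in connections.get(n, ()):
            if state[m] == IN_PROGRESS:
                return True   # reached a node on the current path: a loop
            if state[m] == UNVISITED and has_cycle_from(m):
                return True
        state[n] = DONE
        return False

    return not any(state[n] == UNVISITED and has_cycle_from(n) for n in nodes)

# Hypothetical two-layer network: inputs feed n1 and n2, which feed n3.
feedforward_net = {
    "x1": ["n1", "n2"],
    "x2": ["n1", "n2"],
    "n1": ["n3"],
    "n2": ["n3"],
    "n3": [],
}

# The same network with a feedback link from n3 back to n1.
recurrent_net = dict(feedforward_net, n3=["n1"])

print("feedforward_net is feedforward:", is_feedforward(feedforward_net))
print("recurrent_net is feedforward:", is_feedforward(recurrent_net))
```

Adding a single feedback link is enough to change the classification, which matches the definition in the text: any circular path makes the network recurrent.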